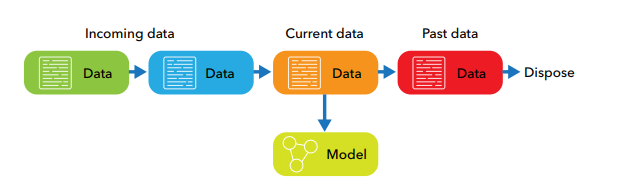

In the field of machine learning, online learning refers to the collection of machine learning methods that learn from a sequence of data provided over time. In online learning, models update continuously as each data point arrives.

You often hear online learning described as analyzing “data in motion,” because it treats data as a running stream and it learns as the stream flows. Classical offline learning (batch learning) treats data as a static pool, assuming that all data is available at the time of training. Given a dataset, offline learning produces only one final model, with all the data considered simultaneously. Online learning only looks at a single data point at a time. With each new data point, online learning makes a small incremental update to the model it built with past data. As the model receives more data points, the model gradually improves.

A few examples of classical online learning algorithms include recursive least squares, stochastic gradient descent and multi-armed bandit algorithms like Thompson sampling. Many online algorithms (including recursive least squares and stochastic gradient descent) have offline versions. These online algorithms are usually developed after the offline version, and are designed for better scaling with large datasets and streaming data. Algorithms like Thompson sampling on the other hand, do not have offline counterparts, because the problems they solve are inherently online.

Let’s look at interactive ad recommendation systems as an example. You’ll find ads powered by these systems when you browse popular publications, weather sites and social media networks. These recommendation systems build customer preference models by tracking your shopping and browsing activities (ad clicking, wish list updates and purchases, for example). Due to the transient nature of shopping behaviors, new recommendations must reflect the most recent activities. This makes online learning a natural choice for these systems.

Because of its applications in the era of big data, online learning has gained significant popularity and momentum. Three Vs characterize big data: volume, velocity and variety.

- Volume refers to the amount of data, both in the number of data points, and the number of variables per data point.

- Velocity refers to the speed of data accumulation. Modern sensors collect a huge amount of data at such an incredible speed, that analysts cannot keep pace. A typical NASA mission collects terabytes of data every day. The Large Hadron Collider (LHC) at CERN “generates so much data that scientists must discard the overwhelming majority of it – hoping hard they've not thrown away anything useful.”

- The variety of data complicates these situations. Data not only comes in different forms (image, audio and text), but also changes over time.

While not a cure-all, online learning’s “on the go” learning style is uniquely suited for many of the challenges brought by big data.

Advantages of online learning

Let’s dig a bit deeper into each of the three Vs and consider the benefits of online learning for each.

Volume: online learning requires smaller data storage

With high volume data, it is often infeasible to read in all data at once. Since online learning updates its model using only the newest data points, the system does not need to store a large amount of data in memory. Compared with offline learning, systems using online learning can maintain a much smaller amount of data storage. High throughput and limited memory applications can significantly benefit from online learning’s ability to generate a model from streaming data.

Velocity: online learning allows fast model updates

Online learning is designed with speed in mind. By processing only a small chunk of data at a time, online learning methods keep the computational complexity of each update small. When a model needs frequent updates, re-training offline models using offline learning methods can be extremely costly. Online learning methods, therefore, scale better than their offline counterparts. As a result, online learning is the natural choice for applications with strict time budgets, such as real-time audio/video processing or web applications where response time needs to be on the order of milliseconds.

Variety: online learning adapts better to changes in data

Many online learning methods have a “forgetting factor” that allows the user to set a speed at which the methods “forget” the past. Online learning achieves this by gradually discounting the importance of past data. As a result, the model automatically adapts to changes in the dataset, such as market changes and customer behavior shifts. Conversely, sudden changes in the model can also indicate changes in data, which can help users identify changes in the market. Offline models are not ideal candidates for change detections as they are typically updated less frequently and can cause significant detection delays.

Of course, online learning methods also face some challenges. Many online learning methods need good initializations to avoid long convergence time, and frequent model updates can cause unwanted model output fluctuations. Also, models trained by online learning methods are generally more difficult to maintain, as online models can become unstable or corrupted due to contaminated data. In order to keep the model accurate and stable, an online learning service provider needs to continually monitor the data and model quality.

Despite these challenges, online learning has become one of the many invaluable toolkits in the field of machine learning due to its capabilities in high speed data processing and its fast adaptation to data changes.

This is the second post in my series about machine learning concepts. Read the first post about machine learning styles, or download the paper, "The Machine Learning Landscape," to learn more about machine learning opportunities.