CAS Table

SAS Viya’s in-memory tables are referred to as a CAS table and are accessed using a CAS Engine. In this post, we will explore how one can parallel load and compress a CAS table in one pass of the CAS table.

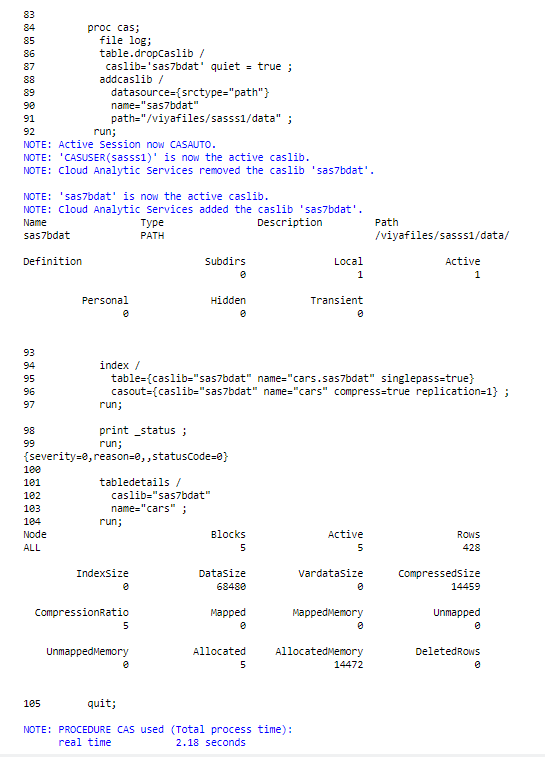

Note: When not using this technique (i.e. PROC CASUTIL with a COMPRESS option) your loading of a CAS table will be a single-threaded process (slower). To understand the following code, which can act as a template for you, see Figure 1, we will review the SAS Log of this code in Figure 2.

proc cas; file log; table.dropCaslib / caslib='sas7bdat' quiet = true; addcaslib / datasource={srctype="path"} name="sas7bdat" path="/viyafiles/sasss1/data" ; run; index / table={caslib="sas7bdat" name="cars.sas7bdat" singlepass=true} casout={caslib="sas7bdat" name="cars" BLOCKSIZE=536870912 compress=true replication=0} ; run; print _status; run; tabledetails / caslib="sas7bdat" name="cars" ; run; quit; |

Figure 1. Template of SAS Code to Parallel Load and Compress a CAS Table in One Pass of the CAS Table

To accomplish the parallel load and compression of a CAS table, we will leverage PROC CAS. Let’s review the SAS log in Figure 2:

- Line 85 utilizes the FILE LOG statement to redirect the contents we would normally see in the results window directly to the SAS Log i.e. the information between lines 92 and 93 as well as lines 103 and 104. Note: using the FILE LOG statement is optional.

- On lines 86-92 we are dropping and creating our CASLIB to point to the file system path that contains the SAS7BDAT data set that we want to load and compress, i.e. CARS.

- On line 87 we added the option QUIET = TRUE to our statement. This is a very handy trick to avoid the ERROR message we get in the SAS Log in Figure 3. If you omit this option an ERROR message will be produced if you have a brand-new CAS Session and our SAS7BDAT CASLIB has not be defined to that session.

- Lines 88-91 created our CASLIB to our SAS7BDAT data sets.

- Lines 94-96 are the statements that accomplish the parallel load and compression of our CAS table.

- On line 94 we use the INDEX statement which is always executed in parallel. Notice we are not creating any indexes in our example but simply using the INDEX statement to activate the parallel load.

- On line 95 we identify the CASLIB pointing to our source data set CARS.SAS7BDAT. We are also using the option SINGLEPASS = TRUE which means our CAS table will be loaded and compressed as each thread adds a row to our CAS table.

- On line 96 we are saving our CAS table to our CASLIB SAS7BDAT and naming it CARS. The COMPRESS = TRUE options ensures our CAS table will be compressed and the REPLICATION = 1 ensures our CAS table is replicated i.e. 2 copies of the CAS table, to ensure high availability of the CAS table.

- On line 98 will print to the SAS Log information telling use that the table was loaded and compressed successfully i.e. {severity=0,reason=0,,statusCode=0}.

- Lines 101-103 provide information on our compressed CAS table. Reviewing this information, we can see our CAS table has a compression ratio of 5.

Figure 2. SAS Log to Parallel Load and Compress a CAS Table in One Pass of the CAS Table

Figure 3. To Avoid this ERROR Message, We Will Add to Line 76 the option QUIET = TRUE, See Line 87 in Figure 2.

Conclusion

When loading multiple CAS tables it is a common practice to compress CAS tables to help avoid the paging of our CAS tables to CAS DISK_CACHE. Paging to CAS_DISK_CACHE impacts performance. In addition, one can parallel load and compress source tables from various formats i.e. CSV files, Hadoop tables as well as many others relational databases, such as Oracle, Teradata and so on.