JSON is the new XML. The number of SAS users who need to access JSON data has skyrocketed, thanks mainly to the proliferation of REST-based APIs and web services. Because JSON is structured data in text format, we've been able to offer simple parsing techniques that use DATA step and most recently PROC DS2. But finally*, with SAS 9.4 Maintenance 4, we have a built-in LIBNAME engine for JSON.

Simple JSON example: Who is in space right now?

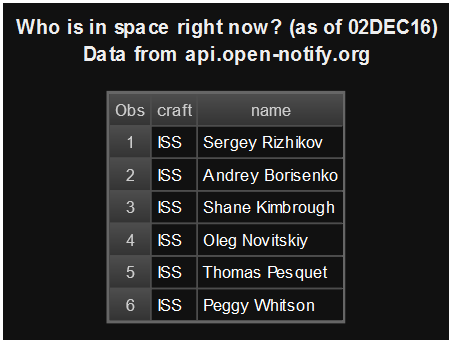

Speaking of skyrocketing, I discovered a cool web service that reports who is in space right now (at least on the International Space Station). It's actually a perfect example of a REST API, because it does just that one thing and it's easily integrated into any process, including SAS. It returns a simple stream of data that can be easily mapped into a tabular structure. Here's my example code and results, which I produced with SAS 9.4 Maintenance 4.

filename resp temp;
/* Neat service from Open Notify project */
proc http
  url="http://api.open-notify.org/astros.json"
  method="GET"
  out=resp;
run;
/* Assign a JSON library to the HTTP response */
libname space JSON fileref=resp;
/* Print result, dropping automatic ordinal metadata */
title "Who is in space right now? (as of &sysdate)";
proc print data=space.people (drop=ordinal:);
run;
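You can also see how the engine lays out tables before you call a live service. Here's a minimal offline sketch. It assumes the response has the shape of astros.json: a top-level message and number, plus a people array of name/craft objects. The payload is hand-written and the astronaut names are placeholders. The fileref and libref (ASTROJS, ASTRO) are arbitrary, and it needs SAS 9.4M4 or later.

```sas
/* Offline sketch: hand-written sample shaped like the astros.json     */
/* response (assumed fields: message, number, people[name, craft]).    */
/* The names below are placeholders, not real astronaut data.          */
filename astrojs temp;

data _null_;
  file astrojs;
  put '{"message": "success", "number": 2,';
  put ' "people": [{"craft": "ISS", "name": "Astronaut A"},';
  put '            {"craft": "ISS", "name": "Astronaut B"}]}';
run;

/* Same engine and syntax as the live example */
libname astro JSON fileref=astrojs;

/* Expect ROOT (top-level fields), PEOPLE (the array) and ALLDATA */
proc datasets lib=astro;
quit;

/* Drop the ordinal key columns the engine adds */
proc print data=astro.people(drop=ordinal:);
run;
```

Top-level fields land in ROOT, and each element of the people array becomes one row in PEOPLE. The ordinal key columns tie those rows back together, which is why the print step drops ordinal:.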

But what if your JSON data isn't so simple? JSON can represent information in nested structures that can be many layers deep. These cases require some additional mapping to transform the JSON representation to a rectangular data table that we can use for reporting and analytics.

JSON map example: Most recent topics from SAS Support Communities

In a previous post I shared a PROC DS2 program that uses the DS2 JSON package to call and parse our SAS Support Communities API. The parsing process is robust, but it requires quite a bit of foreknowledge about the structure and fields within the JSON payload. It also requires many lines of code to extract each field that I want.

Here's a revised pass that uses the JSON engine:

/* split URL for readability */
%let url1=http://communities.sas.com/kntur85557/restapi/vc/categories/id/bi/topics/recent;
%let url2=?restapi.response_format=json%str(&)restapi.response_style=-types,-null,view;
%let url3=%str(&)page_size=100;
%let fullurl=&url1.&url2.&url3;
filename topics temp;
proc http
  url= "&fullurl."
  method="GET"
  out=topics;
run;
/* Let the JSON engine do its thing */
libname posts JSON fileref=topics;
title "Automap of JSON data";
/* examine resulting tables/structure */
proc datasets lib=posts; quit;
proc print data=posts.alldata(obs=20); run;

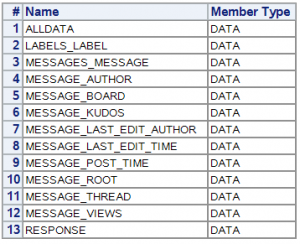

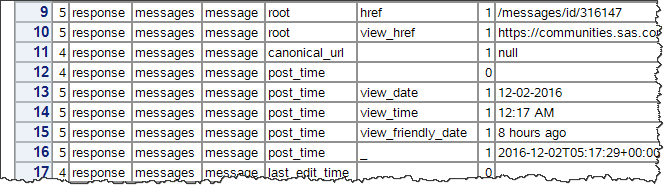

Thanks to the many layers of data in the JSON response, here are the tables that SAS creates automatically.

There are 12 tables that contain various components of the message data that I want, plus the ALLDATA member that contains everything in one linear table. ALLDATA is good for examining structure, but not for analysis. You can see that it's basically name-value pairs with no data types/formats assigned.

I could use DATA steps or PROC SQL to merge the various tables into a single denormalized table for my reporting purposes, but there is a better way: define and apply a JSON map for the libname engine to use.
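For comparison, here's roughly what the do-it-yourself merge looks like in PROC SQL. This is a sketch on an invented payload, not the Communities data. It joins the ROOT table to the PEOPLE table on ordinal_root, the key column the engine adds so that child rows point back to their parent. Deeper structures need one join per level, and that is why the map approach scales better.

```sas
/* Sketch of the do-it-yourself merge. The payload is invented; the    */
/* join relies on the ORDINAL_ key columns the JSON engine adds so     */
/* that child rows can be tied back to their parent record.            */
filename crewjs temp;

data _null_;
  file crewjs;
  put '{"message": "success", "number": 2,';
  put ' "people": [{"craft": "ISS", "name": "Astronaut A"},';
  put '            {"craft": "ISS", "name": "Astronaut B"}]}';
run;

libname crew JSON fileref=crewjs;

/* ROOT holds the top-level fields; PEOPLE carries ordinal_root back to it */
proc sql;
  create table work.crew_flat as
    select r.message, r.number, p.name, p.craft
      from crew.root as r
           inner join crew.people as p
           on r.ordinal_root = p.ordinal_root;
quit;
```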

To get started, I need to rerun my JSON libname assignment with the AUTOMAP option. This creates an external file with the JSON-formatted mapping that SAS generates automatically. In my example here, the file lands in the WORK directory with the name "top.map".

filename jmap "%sysfunc(GETOPTION(WORK))/top.map";
proc http
  url= "&fullurl."
  method="GET"
  out=topics;
run;
libname posts JSON fileref=topics map=jmap automap=create;
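You can try AUTOMAP=CREATE offline as well. This sketch writes a tiny invented payload to a temp file and lets the engine generate a map in the WORK folder (the name sample.map is arbitrary). It then echoes that map to the log, so you can edit a copy of it.

```sas
/* Sketch: let AUTOMAP=CREATE write a map for a small invented payload,*/
/* then echo the map to the log as a starting point for your own.      */
filename smpjs temp;
filename smpmap "%sysfunc(getoption(work))/sample.map";

data _null_;
  file smpjs;
  put '{"people": [{"craft": "ISS", "name": "Astronaut A"}]}';
run;

libname smp JSON fileref=smpjs map=smpmap automap=create;

/* Print the generated JSON map, line by line, to the log */
data _null_;
  infile smpmap;
  input;
  put _infile_;
run;
```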

This generated map is quite long -- over 400 lines of JSON metadata. Here's a snippet of the file that describes a few fields in just one of the generated tables.

"DSNAME": "messages_message",
"TABLEPATH": "/root/response/messages/message",
"VARIABLES": [
  {
    "NAME": "ordinal_messages",
    "TYPE": "ORDINAL",
    "PATH": "/root/response/messages"
  },
  {
    "NAME": "ordinal_message",
    "TYPE": "ORDINAL",
    "PATH": "/root/response/messages/message"
  },
  {
    "NAME": "href",
    "TYPE": "CHARACTER",
    "PATH": "/root/response/messages/message/href",
    "CURRENT_LENGTH": 19
  },
  {
    "NAME": "view_href",
    "TYPE": "CHARACTER",
    "PATH": "/root/response/messages/message/view_href",
    "CURRENT_LENGTH": 134
  },

By using this map as a starting point, I can create a new map file -- one that is simpler, much smaller, and defines just the fields that I want. I can reference each field by its "path" in the JSON nested structure, and I can also specify the types and formats that I want in the final data.

In my new map, I eliminated many of the tables and fields and ended up with a file that was just about 60 lines long. I also applied sensible variable names, and I even specified SAS formats and informats to transform some columns during the import process. For example, instead of reading the message "datetime" field as a character string, I coerced the value into a numeric variable with a DATETIME format:

{
  "NAME": "datetime",
  "TYPE": "NUMERIC",
  "INFORMAT": [ "IS8601DT", 19, 0 ],
  "FORMAT": ["DATETIME", 20],
  "PATH": "/root/response/messages/message/post_time/_",
  "CURRENT_LENGTH": 8
},
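Here's a small, self-contained sketch of the same idea. A hand-written map keeps only two fields and reads an ISO 8601 timestamp into a numeric DATETIME value. The payload, the table name TOPICS, and the variable names subject and posted are invented for illustration. The map attributes (DSNAME, TABLEPATH, ORDINAL, CURRENT_LENGTH, LENGTH, INFORMAT, FORMAT) are the ones discussed in this post and its comments. I'm assuming the top-level "DATASETS" wrapper that AUTOMAP=CREATE produces.

```sas
/* Sketch of a hand-written minimal map. The payload, the TOPICS table */
/* name and the field names are invented; the top-level "DATASETS"     */
/* wrapper is assumed to match what AUTOMAP=CREATE generates.          */
filename topjs temp;
filename topmap temp;

data _null_;
  file topjs;
  put '{"response": {"messages": {"message": [';
  put '  {"subject": "First post",  "post_time": "2016-12-01T10:15:30"},';
  put '  {"subject": "Second post", "post_time": "2016-12-02T08:00:00"}';
  put ']}}}';
run;

data _null_;
  file topmap;
  put '{"DATASETS": [{';
  put '  "DSNAME": "topics",';
  put '  "TABLEPATH": "/root/response/messages/message",';
  put '  "VARIABLES": [';
  put '    {"NAME": "ordinal_message", "TYPE": "ORDINAL",';
  put '     "PATH": "/root/response/messages/message"},';
  put '    {"NAME": "subject", "TYPE": "CHARACTER",';
  put '     "PATH": "/root/response/messages/message/subject",';
  put '     "CURRENT_LENGTH": 100, "LENGTH": 100},';
  put '    {"NAME": "posted", "TYPE": "NUMERIC",';
  put '     "INFORMAT": ["IS8601DT", 19, 0], "FORMAT": ["DATETIME", 20],';
  put '     "PATH": "/root/response/messages/message/post_time",';
  put '     "CURRENT_LENGTH": 19}';
  put '  ]}]}';
run;

/* Apply the custom map: one typed table instead of many */
libname mytop JSON fileref=topjs map=topmap;

data work.topics;
  set mytop.topics;
run;
```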

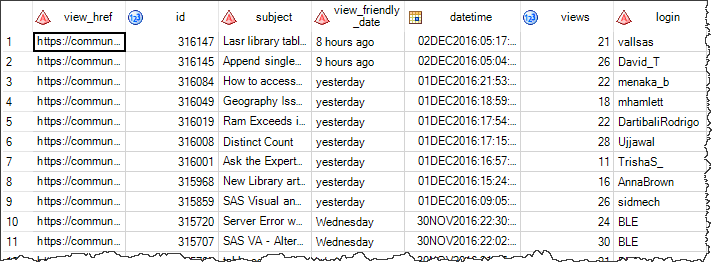

I called my new map file 'minmap.map' and then re-issued the libname without the AUTOMAP option:

filename minmap 'c:\temp\minmap.map';
proc http
  url= "&fullurl."
  method="GET"
  out=topics;
run;
libname posts json fileref=topics map=minmap;
proc datasets lib=posts; quit;
data messages;
  set posts.messages;
run;

Here's a snapshot of the single data set as a result.

I think you'll agree that this result is much more usable than what my first pass produced. And the amount of code is much smaller and easier to maintain than any previous SAS-based process for reading JSON.

Here's the complete program in a public GitHub gist, including my custom JSON map.

* By the way, the JSON libname engine actually made its debut as part of SAS Visual Data Mining and Machine Learning, part of SAS Viya. This is a good example of how work on SAS Viya continues to benefit the users of the SAS 9.4 architecture.

58 Comments

This is the good news for Christmas!

Pingback: Using SAS DS2 to parse JSON - The SAS Dummy

Really useful post, Chris. Thank you.

I’ve been using a lot of web services that return JSON over the last couple of years. I had used Groovy then moved to Python.

Your post prompted me to update to SAS9.4 M4 and try the new JSON engine.

To test the engine, I adapted your ‘space’ example to query the Google Distance Matrix API. I had previously written this in Python and SAS. Now it’s a few lines of SAS code. Job done.

This makes enriching data with external web services a lot simpler. There's lots of potential to be creative here.

Thanks again.

So glad it helped, Neil! With a robust PROC HTTP and now the JSON libname engine, I think we'll see lots of cases where we can reduce the context switching of processes. Fewer moving parts == fewer points of failure!

Come on...!

I have been on a mission trying to get people to understand how great PROC Lua is and my big finale has been my solution for parsing JSON with Lua.

Chris, do you have any insight into changes in Lua support in 9.4M4?

Sorry to steal your thunder here, Peter. PROC LUA is of course still supported and I see some minor fixes went in, but no big changes that I'm aware of.

An alternative for those of us struggling without the M4-release if I may?

You can download and parse JSON using PowerShell.

Use FILENAME PIPE with a PowerShell command that calls Invoke-WebRequest and ConvertFrom-Json. Then it's easy to put things into place using the PRX functions.

Great tip, Peter. Thanks! I have several blog posts about PowerShell and SAS that might help people who are getting started.

Pingback: Reporting on GitHub accounts with SAS - The SAS Dummy

I have a JSON file that has been handed to me. I am trying to read it using the LIBNAME engine:

libname bmk JSON '/json/test.json';

I have also tried

filename resp '/json/test.json';

/* Assign a JSON library to the HTTP response */

libname bmk JSON fileref=resp;

but get the same error either way.

NOTE: JSON data is only read once. To read the JSON again, reassign the JSON LIBNAME.

BMK Ø]Î

ERROR: Error in the LIBNAME statement.

What am I missing?

We are running 9.4.4.0

Is the JSON file very large, or does it contain very long records (lines)? You might need to specify a large LRECL value:

filename resp '/json/test.json' LRECL=1000000;

Another possibility is that the file contains special characters that cannot be processed in your current session encoding. You might need to try running SAS with ENCODING=UTF8, or at least try:

filename resp '/json/test.json' LRECL=1000000 encoding='utf-8';

I am able to get this working with a simple JSON file, but with the one I was given I still get the issue. I noticed that when I run PROC PRINT on the 'p' variables, it works up to p9, but when I get to p10, that is when it dies.

Any thoughts on that?

I found that bringing the data into a SAS data set (using DATA step and a SET statement) is the best approach, before trying to run any PROCs on it. Use the automap and map= options to see the structure of the data first, and perhaps shape the data you get coming in.

If you continue having issues, you might need to work with SAS Tech Support and supply an example of the JSON that's failing. I'm happy to take a look too if you send it to me (chris.hemedinger@sas.com).

Pingback: Using SAS to access Google Analytics APIs - The SAS Dummy

Hi, I'm trying to run this code in EG 7.15, but I'm getting the error below. What am I missing?

filename resp temp;
/* Neat service from Open Notify project */
proc http
  url="http://api.open-notify.org/astros.json"
  method="GET"
  out=resp;
run;
/* Assign a JSON library to the HTTP response */
libname space JSON fileref=resp;
/* Print result, dropping automatic ordinal metadata */
title "Who is in space right now? (as of &sysdate)";
proc print data=space.people (drop=ordinal:);
run;

ERROR:

ERROR: The JSON engine cannot be found.

ERROR: Error in the LIBNAME statement.

56

57 /* Print result, dropping automatic ordinal metadata */

58 title "Who is in space right now? (as of &sysdate)";

59 proc print data=space.people (drop=ordinal:);

ERROR: Libref SPACE is not assigned.

I'd guess your SAS session is not running SAS 9.4 Maint 4 or later. Run "proc product_status; run;" to verify the level of SAS that you have -- the answer will be in the log.
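Here is an alternative check, sketched as an assumption rather than an official test. Try to assign a JSON library to a tiny temp file and look at &SYSLIBRC, which holds the return code of the most recent LIBNAME statement. A nonzero value, together with "ERROR: The JSON engine cannot be found.", means the engine isn't available in that session. The names PROBEJS and PROBE are arbitrary.

```sas
/* Sketch: probe for the JSON engine by assigning a tiny library.      */
/* &SYSLIBRC is the return code of the last LIBNAME statement.         */
filename probejs temp;

data _null_;
  file probejs;
  put '{"ok": 1}';
run;

libname probe JSON fileref=probejs;

%put NOTE: JSON LIBNAME return code is &syslibrc (0 = engine available).;
%put NOTE: This session is running SAS &sysvlong..;
```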

Hi, do I need a SAS/ACCESS license to access JSON data with other products like Base SAS, VA, or VDMML?

No! The JSON library engine is part of Base SAS, and is also part of SAS Viya (where VDMML is based).

Hi Chris,

Thanks for this post. It is very informative. I was recently given a file in JSON format, and I'm running into some difficulties that perhaps you could give some advice on.

My JSON file contains circa 500,000 records.

When I use the JSON engine, SAS parses the file into manageable data sets:

filename in "\\location\BigSimA1000C100D10.txt";
filename map 'my.map';
libname in_json json fileref=in map=map automap=reuse;

However, the following problem is occurring.

One of the data sets that SAS creates is called "address". The JSON engine separates this into its own data set. However, a number of records contain no information of this kind. So if the first reference to address doesn't appear until record 26, SAS assigns an ordinal root key of 1, because it's the first time it encounters it.

Therefore, if I start linking back up, I'll be linking to the wrong record. The same issue will occur in other cases where a variable isn't present on a record.

So I need to ensure an accurate unique ID is on each table.

Would your example above deal with this?

I also tried your example code above:

filename jmap "%sysfunc(GETOPTION(WORK))/top.map";
proc http
  url= "&fullurl."
  method="GET"
  out=topics;
run;
libname posts JSON fileref=topics map=jmap automap=create;

However, when I run this, along with all the previous steps above, no file is put in my work directory. Is there any reason why this is happening?

Chris,

I've been using this mapper and it works fine. I have a large JSON file with 12 million records, which I first want to split into files of 100k and then create SAS data sets from.

Is it possible, when you're using the JSON LIBNAME statement, to write the data sets directly out to a folder?

Sean, the JSON engine is a read-only method of parsing the JSON into structures that look like SAS data sets. You then use DATA step, PROC SQL, or PROC COPY to transform or copy the parts of the data you want to save into true SAS data sets, and save those into a permanent location designated with a LIBNAME statement. With the DLCREATEDIR system option in effect, LIBNAME can also create a new folder in the location you specify, and you can direct your output there.
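Here's a minimal sketch of that workflow using an invented payload. PROC COPY copies the JSON tables into a library whose folder is created on the fly. The folder json_out under WORK is a hypothetical stand-in for your own permanent location. The DLCREATEDIR option is what lets LIBNAME create that folder if it doesn't already exist.

```sas
/* Sketch: read-only JSON tables -> real SAS data sets in a new folder.*/
/* "json_out" under WORK is a hypothetical stand-in for your own path. */
filename copyjs temp;

data _null_;
  file copyjs;
  put '{"people": [{"craft": "ISS", "name": "Astronaut A"},';
  put '            {"craft": "ISS", "name": "Astronaut B"}]}';
run;

libname src JSON fileref=copyjs;

/* DLCREATEDIR lets LIBNAME create the folder if it does not exist */
options dlcreatedir;
libname perm "%sysfunc(getoption(work))/json_out";

/* Copy only the table you need into the permanent library */
proc copy in=src out=perm memtype=data;
  select people;
run;
```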

Pingback: Read RSS feeds with SAS using XML or JSON - The SAS Dummy

How can you map an array of values with the JSON engine without getting value1, value2, etc.? I basically need both the metric name and all values in two columns (here's an example JSON: https://gist.github.com/MrDibbley/153ce9042f8b89653444c4154c2825a7).

Looks like you need to transpose the result, something like this:

Then you can join with the other data set:

Thanks Chris, the transpose was what was needed. I used a proc sql for my final desired result:

proc sql;
  create table tempsql as
    select t2.metric,
           t1.values
      from allvalues t1
           inner join page.stats_specific_responses t2
           on (t1.ordinal_stats_specific_responses =
               t2.ordinal_stats_specific_responses);
quit;
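For readers who want to reproduce this, here's one way the transpose-then-join step can look. It's a sketch, not the code from the reply above. WORK.WIDE imitates a JSON-engine table in which an array of scalars became columns VALUES1-VALUES3. WORK.METRICS imitates the parent table that holds the metric name. Both tables, their values, and the ordinal_stats key name are hypothetical.

```sas
/* Sketch: WIDE mimics a JSON-engine table where an array of scalars   */
/* became VALUES1-VALUES3 columns; all names and values hypothetical.  */
data work.wide;
  input ordinal_stats values1 values2 values3;
  datalines;
1 10 20 30
2 5 . .
;
run;

data work.metrics;
  input ordinal_stats metric $;
  datalines;
1 views
2 clicks
;
run;

/* Wide -> long: one row per array element, keyed by the parent ordinal */
proc transpose data=work.wide
               out=work.long(drop=_name_ rename=(col1=value));
  by ordinal_stats;
  var values:;
run;

/* Join the metric name back on and drop padding from shorter arrays */
proc sql;
  create table work.metric_values as
    select m.metric, l.value
      from work.long as l
           inner join work.metrics as m
           on l.ordinal_stats = m.ordinal_stats
      where l.value is not missing;
quit;
```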

Hi Chris,

I sent a note to your blog on Friday (NZT) about getting an ERROR message in the SASLOG when I read a NULL value for a datetime in my JSON Response with a customised JSON Map.

I have managed to solve the problem now using a custom datetime informat which handles NULLs through the OTHER attribute, which I had thought was the correct route on Friday before I put my hand up and asked for help, but just couldn't get it to work.

The Informat looks like this:

Proc Format Library=WORK;
  InValue DaveDateTime "1900-01-01T00:00:00"-"9999-12-31T23:59:59"=[E8601DT19.]
                       Other=.
  ;
Run;

....and I reference it in the JSON Map, like your example:

{
  "NAME": "modifiedon",
  "TYPE": "NUMERIC",
  "INFORMAT": [ "DAVEDATETIME", 19, 0 ],
  "FORMAT": ["DATETIME", 20],
  "PATH": "/root/value/modifiedon",
  "CURRENT_LENGTH": 19
},

I was going to post my solution to the SAS Community Library, but it appears I'm not at the right level for that yet. Ho hum.

Please scratch my request, but please do keep doing the Blog, it's a treasure trove.

Cheers,

Dave Shea

Wellington

Glad you got it solved! I've made sure that you have article privileges on the communities -- so please, feel free to share!

Chris,

We are testing SAS 9.4M6 now and executed the example code. It worked fine.

But when I click on the library SPACE and try to open this data set, I get an error.

Does that sound familiar?

thanks.

filename resp temp;
proc http
  url="http://api.open-notify.org/astros.json"
  method="GET"
  proxyhost= "nl-proxy-access.net.abnamro.com:8080"
  out=resp;
run;
libname space JSON fileref=resp;
title "Who is in space right now? (as of &sysdate)";
proc print data=space.people (drop=ordinal:);
run;

ERROR:

'source array was not long enough. Check srcIndex and length, and the array's lower bounds'

I can't say -- you might want to check that you're actually getting a JSON response with data.

Try adding:

%macro prochttp_check_return(code);
  %if %symexist(SYS_PROCHTTP_STATUS_CODE) ne 1 %then %do;
    %put ERROR: Expected &code., but a response was not received from the HTTP Procedure;
    %abort;
  %end;
  %else %do;
    %if &SYS_PROCHTTP_STATUS_CODE. ne &code. %then %do;
      %put ERROR: Expected &code., but received &SYS_PROCHTTP_STATUS_CODE. &SYS_PROCHTTP_STATUS_PHRASE.;
      %abort;
    %end;
  %end;
%mend;
%prochttp_check_return(200);
/* Echo the raw HTTP response to the log */
%macro echoResp(fn=);
  data _null_;
    infile &fn;
    input;
    put _infile_;
  run;
%mend;
%echoResp(fn=resp);

Run these right after PROC HTTP to diagnose what the service returned.

Hi Chris,

Excellent blog on the use of PROC HTTP and the JSON engine. Very useful, thanks.

Based on your examples of using PROC HTTP in this and many other blogs, I started working on getting data from Salesforce with PROC HTTP.

I was able to get an OAuth token using PROC HTTP and use it to fetch data from a Salesforce object by passing a SOQL statement.

One challenge I faced was how Salesforce returns records in the JSON file. Based on a limit set by the administrator, it returns a certain number of records at a time. Along with that, it also returns a nextURL parameter to use for fetching the next 500 records.

https://developer.salesforce.com/docs/atlas.en-us.api_rest.meta/api_rest/dome_query.htm

For example, if I have 50,000 records in a Salesforce table, it returns 500 records and a nextURL parameter, which we have to use to get the next 500 records, and so on.

So I have to store nextURL in a macro variable and run PROC HTTP in a loop to get all the records. But this creates lots of JSON files: in this example, 100 JSON files.

Is there a way to concatenate all of these JSON files into a single file and then convert it to a SAS table? I cannot convert them to SAS tables and then concatenate them. If a variable has a character value in the first 10 records but is missing in the rest of the 50,000, the first JSON file creates that variable as character, while the rest of the JSON files create it as numeric. So there will be a type mismatch. If we can concatenate all the JSON files into a single file and then convert it to a SAS table, that might solve the issue.

Is there any way to concatenate multiple JSON files in SAS? Or any other suggestions?

Thanks

Pallav

Pallav,

You might be able to post-process the JSON files to "wrap" them in an outer JSON object, but instead, I recommend that you use the JSON map (my second example) to control how SAS reads the fields. If you need the value to be treated as character, then using the map file will ensure that happens consistently with each set of JSON data.
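Here's a sketch of applying one map to every page of results. The two payloads, the map, and the field names (Id, Code) are hypothetical. The map declares CHARACTER types and explicit lengths, so the column attributes stay identical from page to page. PROC APPEND can then stack the pages, even when a field is null in one of them.

```sas
/* Sketch: two invented "pages" read through one shared map so column  */
/* types and lengths match, then appended. All names are hypothetical. */
filename page1 temp;
filename page2 temp;
filename recmap temp;

data _null_;
  file page1;
  put '{"records": [{"Id": "001", "Code": "ABC"}]}';
run;

data _null_;
  file page2;
  put '{"records": [{"Id": "002", "Code": null}]}';
run;

data _null_;
  file recmap;
  put '{"DATASETS": [{"DSNAME": "records", "TABLEPATH": "/root/records",';
  put '  "VARIABLES": [';
  put '    {"NAME": "Id", "TYPE": "CHARACTER", "PATH": "/root/records/Id",';
  put '     "CURRENT_LENGTH": 18, "LENGTH": 18},';
  put '    {"NAME": "Code", "TYPE": "CHARACTER", "PATH": "/root/records/Code",';
  put '     "CURRENT_LENGTH": 40, "LENGTH": 40}';
  put '  ]}]}';
run;

%macro read_pages(n);
  %local i;
  /* Start clean so that reruns don't duplicate rows */
  %if %sysfunc(exist(work.all_records)) %then %do;
    proc datasets lib=work nolist;
      delete all_records;
    quit;
  %end;
  %do i = 1 %to &n;
    libname pg&i JSON fileref=page&i map=recmap;
    proc append base=work.all_records data=pg&i..records;
    run;
    libname pg&i clear;
  %end;
%mend read_pages;

%read_pages(2)
```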

Hey Chris, just an update: I was able to complete this using PROC JSON. I used PROC JSON with the EXPORT statement to append multiple JSON files into a single JSON file. I haven't explored the JSON map yet, but it's on my list.

Thanks

Pallav Lodhia

Can someone give me an example of using the JSON output generated by the Data-Driven Content object in Visual Analytics and saving it to a CAS table?

It should be wrapped into the HTML code that is called by the DDC.

That would be great :)

Arne - I see you asked this on SAS Support Communities too...so let's address it there.

Hi Chris, thanks for this great article.

I work with SAS EG 7.1. Is it possible to install the JSON engine?

Thanks for your help, and please excuse my English; I'm French.

Soq,

The JSON engine is part of SAS 9.4M4 - it's independent of SAS Enterprise Guide. Check your SAS version (proc product_status; run;) to see that you've got the level needed. SAS 9.4 Maint 4 was released in November 2016.

Hi Chris,

Thanks for all your help. I have made my own custom map, because I have to parse my JSON files (batches of 100k) each month.

However, in my custom map, I would like to increase the size of character variables to make sure they don't get truncated. When I increase the CURRENT_LENGTH value, as in the example below, it doesn't carry over into the SAS data set; the length is not 100.

Could you provide some insight into this? Basically, I want to future-proof my program so that no character variables get truncated.

"NAME": "employerRegistrationId",
"TYPE": "CHARACTER",
"PATH": "/root/employerRegistrationId",
"CURRENT_LENGTH": 100

Almost there. Just add an additional attribute:

"LENGTH": 100

CURRENT_LENGTH is the expected length of the JSON value, and LENGTH is like a SAS LENGTH statement that actually allocates the space.

Thanks Chris. I assume I should get rid of the line Current_length and replace it with the length statement?

Not necessary to remove. Think of "CURRENT_LENGTH" as "incoming length" and "LENGTH" as final length of the variable to store.
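Here's a small sketch that makes the difference visible, using an invented payload and map. Both variables declare CURRENT_LENGTH, but only long_desc also declares LENGTH. The PROC CONTENTS output shows the allocated lengths. The check here relies only on the LENGTH attribute being honored, as described in the reply above.

```sas
/* Sketch: CURRENT_LENGTH (incoming) vs LENGTH (allocated). The field  */
/* names and payload are invented for illustration.                    */
filename lenjs temp;
filename lenmap temp;

data _null_;
  file lenjs;
  put '{"items": [{"short_desc": "abc", "long_desc": "abc"}]}';
run;

data _null_;
  file lenmap;
  put '{"DATASETS": [{"DSNAME": "items", "TABLEPATH": "/root/items",';
  put '  "VARIABLES": [';
  put '    {"NAME": "short_desc", "TYPE": "CHARACTER",';
  put '     "PATH": "/root/items/short_desc", "CURRENT_LENGTH": 100},';
  put '    {"NAME": "long_desc", "TYPE": "CHARACTER",';
  put '     "PATH": "/root/items/long_desc", "CURRENT_LENGTH": 100, "LENGTH": 100}';
  put '  ]}]}';
run;

libname lens JSON fileref=lenjs map=lenmap;

/* Compare the allocated lengths of the two variables */
proc contents data=lens.items;
run;
```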

Thanks Chris. Just one final thing. When I use proc copy to copy my dataset over into a permanent library I get the following warning;

WARNING: Data truncation occurred on variable description in data set EVERYTHING, variable length 20, data length 90.

I have been changing the length of this to see if it makes a difference but it doesn't appear to.

Any idea what is going on?

Did you add a LENGTH attribute to the "description" field in your map as well? Usually that message indicates that the allocated length isn't what you thought it should be.

Oh that's interesting. This actually might save me time in updating my map.

How does the SAS JSON engine assign variable lengths?

For instance, if I have a JSON file with 50,000 records, will SAS read in all the records before assigning a final column length?

Basically, I'm trying to future-proof against truncation of character variables. If SAS waits until it reads the last row before it assigns a length, then that takes care of my issue.

A bit like using PROC IMPORT with GUESSINGROWS set to the maximum.

I *think* that SAS does read the entire JSON before assigning the final length. In many of my cases, I'm reading multiple JSON sets (multiple libname statements) and then assembling into a larger table. In that case, the first table in determines the length and if others have longer values, they get truncated. So I try to explicitly set the length to the max size I'll need.
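Here's a sketch of explicitly setting the length when you combine tables from several JSON libraries. The two payloads are invented so that the longer value arrives in the second batch. Declaring LENGTH before the SET statement sizes the variable for every input, instead of inheriting the length from the first table. The length of 200 is an arbitrary assumption.

```sas
/* Sketch: two invented batches where the longer value arrives second. */
/* LENGTH before SET sizes the variable for all inputs.                */
filename b1js temp;
filename b2js temp;

data _null_;
  file b1js;
  put '{"items": [{"description": "short"}]}';
run;

data _null_;
  file b2js;
  put '{"items": [{"description": "a considerably longer description value"}]}';
run;

libname b1 JSON fileref=b1js;
libname b2 JSON fileref=b2js;

data work.all_items;
  length description $ 200;   /* set this to the longest value you expect */
  set b1.items b2.items;
run;
```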

Hi, I'm new to SAS. I used the tips in this article. I requested a JSON file, but I can't apply an encoding.

By default file encoding would fall to your SAS session encoding (ENCODING= option). You can apply a different encoding using the FILENAME statement. JSON (especially from the web) works best when your encoding is UTF-8.

This is the part of the code where I want to apply an encoding that differs from the SAS server's (the default is CYRILLIC). But I don't get the desired result for one variable. The PROC PRINT step succeeds, but I need to continue working with the data.

libname resp_js json fileref=out;

filename level1 "resp_js.list" encoding='utf-8';

data pure_js(drop= ordinal_root ordinal_list);

set level1;

run;

thanks!

The data you're reading from JSON needs to be transcoded into the SAS session encoding, and it's possible that some characters aren't able to come over. If you have any way to run SAS using ENCODING=UTF8, I suggest doing that. Any Cyrillic characters should render fine.

Thank you for the advice. But if we change the encoding of the server, other users will be inconvenienced. Are there any workarounds for this problem? Thanks.

I believe the JSON engine tries to use utf8 by default. If your JSON content is actually encoded differently, use ENCODING= on the JSON libname statement to influence how it reads the data.

If the session encoding is the culprit, is it possible to start a SAS session with the encoding you need just for this purpose, to read this data? If working with a central environment, perhaps your admin can create a "SASApp - UTF8" logical server that you can use to get the data into a SAS data set.

Thank you

Pingback: How to test PROC HTTP and the JSON library engine - The SAS Dummy

This is an amazing and detailed blog. Thank you so much.

However, I have a question about how to extract the data into a data set when the data to be extracted is an object rather than an element.

Example:

"DSNAME": "messages_message",
"TABLEPATH": "/root/response/messages/message",
"VARIABLES": [
  "abcd",
  "efge",
  "ighj"
]

This is a JSON array value. By default, the SAS JSON engine will place each of these values in its own column within a table. You can then use PROC TRANSPOSE or a DATA step to restructure the data from "wide" to "long".
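Here's a minimal sketch of the DATA step route. WORK.WIDE_TAGS stands in for the one-column-per-element table the engine produces. The table and column names (tags1-tags3) are hypothetical. An array walks the columns and writes one row per non-blank element, keeping each element's position.

```sas
/* Sketch: WIDE_TAGS mimics the one-column-per-element layout; the     */
/* table and column names are hypothetical.                            */
data work.wide_tags;
  input ordinal_root tags1 $ tags2 $ tags3 $;
  datalines;
1 abcd efge ighj
;
run;

/* Reshape wide -> long: one output row per non-blank array element */
data work.long_tags(keep=ordinal_root position tag);
  set work.wide_tags;
  array t{*} tags1-tags3;
  length tag $ 8;
  do position = 1 to dim(t);
    if not missing(t{position}) then do;
      tag = t{position};
      output;
    end;
  end;
run;
```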

This is a great article, and from 6 years back! Thank you, Chris. I will be working on getting bitcoin data using a JSON API. I'm sure I'll have some questions later on, but your blog explains it very clearly.

Jit

Is there an option to tell SAS to treat all arrays as simple text strings? I don't want them extracted from the dataset nor do I want them parsed into multiple columns. Seems like quite a bit of unnecessary work to stitch the Value1 ... ValueN columns back together and re-merge them back into the original dataset from which they were needlessly removed in the first place.

I agree it's inconvenient, but I'm not aware of a way to tell the JSON libname engine to "please don't parse" some segments of data.

Another approach, if you have SAS Viya, is to use PROC PYTHON to apply Python methods to that and transform the data as you want it, then convert from a data frame to a data set.

Thank you for the prompt reply. Will do.