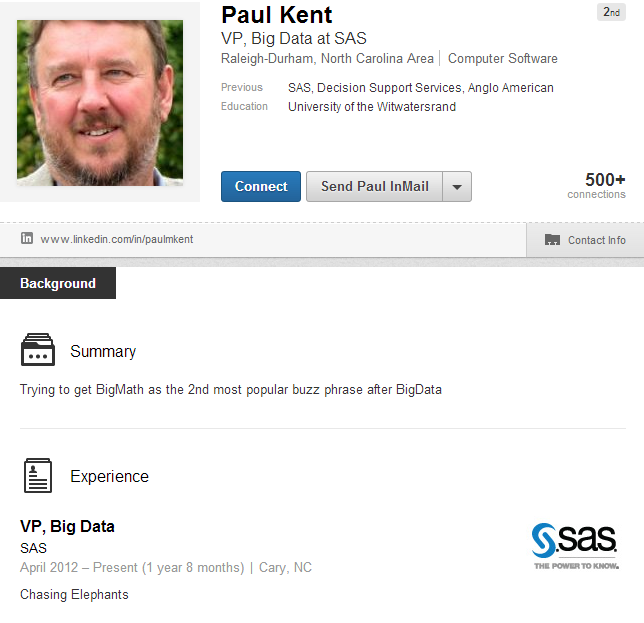

If you look up Paul Kent on LinkedIn, you’ll find a two-word description for his job at SAS that says, simply: Chasing elephants.


Are you picturing a Disney cartoon? Or an oversized circus act? What if I told you that Kent’s official title is SAS VP of Big Data? Now, do you know which elephants we’re talking about?

We’re talking about Hadoop, the open-source big data framework whose mascot is a yellow elephant.

“My job has been to make big data analytics fit into the data center,” says Kent. He tells a great story about the evolution of high-performance analytics at SAS, and he tells it without the aid of PowerPoint slides or intricate data center drawings. Instead, he uses a dry erase board and a dozen magnets.

The story Kent tells is a tale of developing new software products, rewriting code and working closely with database partners to handle big data problems on all types of data. It sounds complex, but the final result, like most good programming, is simple and boils down to three truths:

- Let the math communicate.

- Give the math a place to work.

- Feed the data to the math as quickly as possible.

1. Let the math communicate

This first point refers to parallelism, where complex math problems are broken down into groups of calculations that can be processed at the same time. Each of those separate calculations works on its own section of the data, and the partial results are then combined into a final answer.

This type of parallelism is common across the industry, but SAS developers realized early on that creating a way to communicate back and forth between the individual calculations would be important.

To explain why this matters, Kent often asks an audience to work together to find the average age of everyone in the room. If attendees sit at several tables, each table might calculate its own average, but eventually the tables need to come together and work out an overall average. It takes communication to find that final answer.

“Without this ability to communicate between the individual units of parallelism, you have to send another query into the database. You can get a partial answer, aggregate it and calculate some more and keep going. It works, but it’s not the quickest, not the best way to solve the complete problem.”

To create math that communicates, SAS developers rewrote the company’s code to run calculations in parallel on separate nodes of data, “talk” through the calculations as needed, and come back together to agree on the final answer very quickly.
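Kent’s average-age exercise can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not SAS code: the table names and ages are hypothetical, and Python threads stand in for separate compute nodes. It also shows what the tables actually need to communicate. Each table reports its sum of ages and its head count rather than just its average, because averaging the table averages gives the wrong answer when tables seat different numbers of people.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical ages of attendees, grouped by table (illustrative data only).
tables = {
    "table_a": [25, 31, 47],
    "table_b": [52, 38],
    "table_c": [29, 33, 41, 60, 22],
}

def partial_aggregate(ages):
    """Each unit of parallelism reports a (sum, count) pair, not just its average."""
    return sum(ages), len(ages)

def combine(partials):
    """Merge the partial results communicated back by every unit."""
    total = sum(s for s, _ in partials)
    count = sum(n for _, n in partials)
    return total / count

# Threads stand in for separate nodes; each one works on its own table.
with ThreadPoolExecutor() as pool:
    partials = list(pool.map(partial_aggregate, tables.values()))

overall_average = combine(partials)

# For comparison: averaging the per-table averages ignores table sizes.
naive_average = sum(s / n for s, n in partials) / len(partials)

print(f"overall average: {overall_average:.2f}")           # overall average: 37.80
print(f"average of table averages: {naive_average:.2f}")   # average of table averages: 38.78
```

Sending a (sum, count) pair is what makes the merge exact: the combining step needs only a handful of numbers from each table, never the raw ages.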

2. Give the math a place to work

Originally, SAS set out to run its new math on large sections of data inside the database. This was achieved through in-memory, parallel computing that took place inside Teradata or EMC Greenplum databases, for example. With SAS inside the database, results for basic averages and summations, as well as for more complicated math such as logistic regression, came back very, very fast. But, says Kent, this structure created some challenges. Data centers needed to set aside a section of their database hardware to run the math, and that wasn’t easy for companies whose enterprise data warehouse (EDW) machines were resource-constrained.

“Their systems are so well engineered to do data warehousing tasks that there’s not a lot of space left for the math,” says Kent.

The solution came in the form of an analytic server where SAS could reside separately. This way, the mathematical formulas can live inside their own custom environment that is created specifically for analytics. This custom environment sits alongside the database instead of inside it and is sometimes called a SAS in-memory analytic server or a high-performance analytic server. But the SAS developers, who make the math fast, like to use its sci-fi-inspired name: the SAS LASR server.

“This model fits better in IT,” says Kent. “They don’t need new data warehouses. They can buy an appliance with SAS mathematics and they can put it near the data in the center.”

3. Feed the data to the math as quickly as possible

The final piece of the puzzle is getting the data into the LASR server. “We have to feed the data into the math as quickly as possible,” says Kent. To do this, SAS developers engineered special routers, or connectors, for different database environments that use the network technologies native to each database.

So, the data can be stored in Teradata, Oracle, IBM or EMC Greenplum, and SAS connects to it, pulls in the sections of data it needs to run its calculations and then offers an answer faster than ever before.

The first tests in this new environment surprised even Kent. “This configuration got about 109 percent of the throughput than before. I was expecting to pay some tax from going through the network wires. But the SAS nodes got to focus on doing their SAS things, and the EDW nodes got to focus on doing their data warehouse things. Something interesting goes on there, and the spreading of the problem was a success.”

The same concept works with data in Hadoop, too. That’s where the “Chasing elephants” moniker comes in, after all. “Today, everyone is playing with Hadoop,” says Kent. “Not all of their data needs to be in gold plated storage, so they’re experimenting with cheaper platforms for the massive amounts of data flying in from the Web and elsewhere.”

The beautiful thing about this, says Kent, is that the same networking technology that hooks into the data warehouse can hook up to more than one data storage device, so SAS can analyze data from the EDW and Hadoop at the same time.
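The idea of pulling sections of data from more than one store at once, and letting the math start on each section as soon as it arrives, can be sketched in Python. This is an illustrative assumption, not a description of how SAS connectors work: `SOURCES` and `fetch_partition` are hypothetical stand-ins for an EDW and a Hadoop cluster, `time.sleep` fakes network latency, and a thread pool plays the role of parallel network transfers.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

# Hypothetical stand-ins for two storage systems, each split into partitions.
# In a real deployment these would be network reads through a connector;
# here they are in-memory lists so the sketch runs anywhere.
SOURCES = {
    "edw": [[120.0, 95.5], [88.0, 102.5, 110.0]],
    "hadoop": [[15.0, 22.5, 9.0], [31.0], [12.5, 18.0]],
}

def fetch_partition(source, index):
    """Hypothetical connector call: return one partition of one source."""
    time.sleep(0.01)  # simulate network latency
    return source, SOURCES[source][index]

running_sum = 0.0
running_count = 0
rows_per_source = {name: 0 for name in SOURCES}

with ThreadPoolExecutor(max_workers=4) as pool:
    # Request every partition from both stores at the same time.
    futures = [
        pool.submit(fetch_partition, name, i)
        for name, parts in SOURCES.items()
        for i in range(len(parts))
    ]
    # Consume each partition as soon as it arrives instead of waiting for all of them.
    # Only this loop (in the main thread) updates the running totals.
    for future in as_completed(futures):
        source, rows = future.result()
        running_sum += sum(rows)
        running_count += len(rows)
        rows_per_source[source] += len(rows)

print(rows_per_source)                                   # {'edw': 5, 'hadoop': 6}
print(f"combined mean: {running_sum / running_count:.2f}")  # combined mean: 56.73
```

Because the running totals are simple sums, the order in which partitions arrive does not change the result, which is what lets the computation start before the slowest source has finished sending.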

Learn more about big data by downloading this Big Data in Big Companies research report.