In part one of this blog series, we explained that the analytics lifecycle involves much more than authoring models. As brands invest in creating models to solve critical business problems, the need to manage these assets as valuable competitive differentiators grows as well.

In part two of this series, we will walk through best practices for managing analytical assets.

Demonstrating how brands manage actionable analytical assets

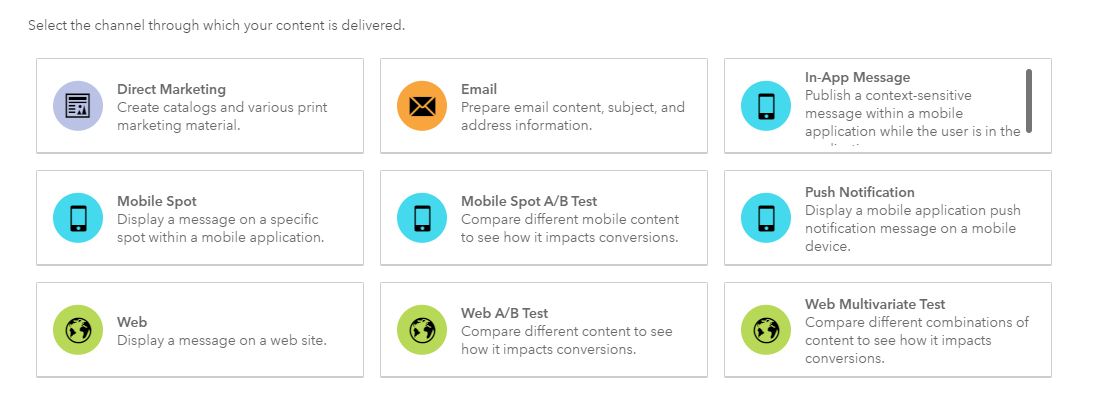

Now that you have selected an analysis worthy of addressing your marketing team's business problem, you can begin managing the models for deployment. Options include:

- Publishing models for scoring directly in databases.

- Scoring holdout data.

- Importing the scoring of other models for comparison.

- Downloading score code in multiple languages.

- Downloading a scoring API for invoking models as web services.

Users can store models in a common model repository and organize them within projects and folders. A project consists of the models, attributes, tests and other resources that you use to:

- Evaluate models for champion model selection.

- Monitor model performance to minimize exposure to decaying predictions.

- Publish models to other areas of the SAS platform or third-party technologies.

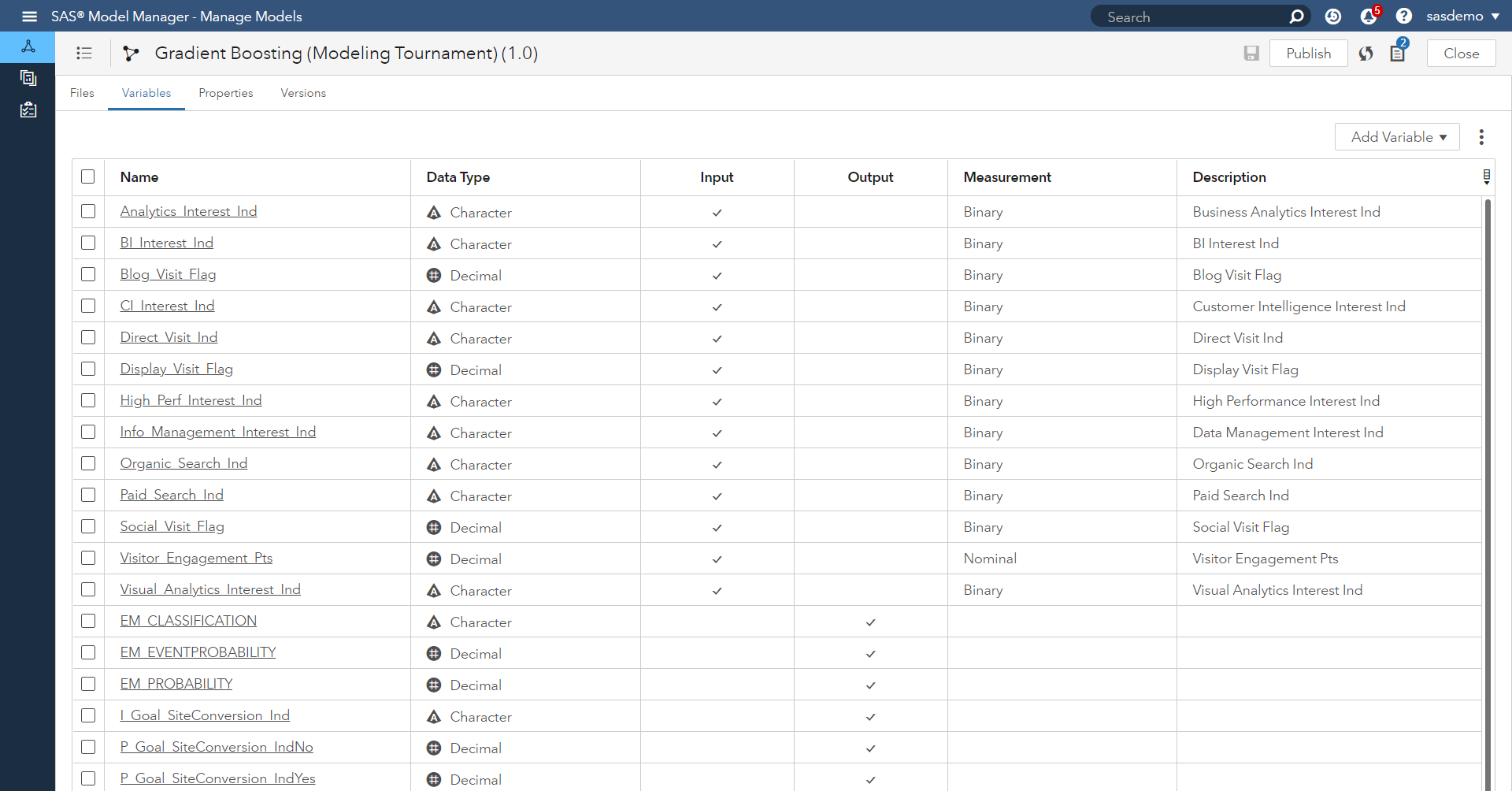

All model development and maintenance personnel, including data modelers, validation testers, scoring officers and analysts, can use and benefit from these features. We begin by opening the gradient boosting model in Figure 2 to highlight how users can add, review and customize the model’s input and output variables.

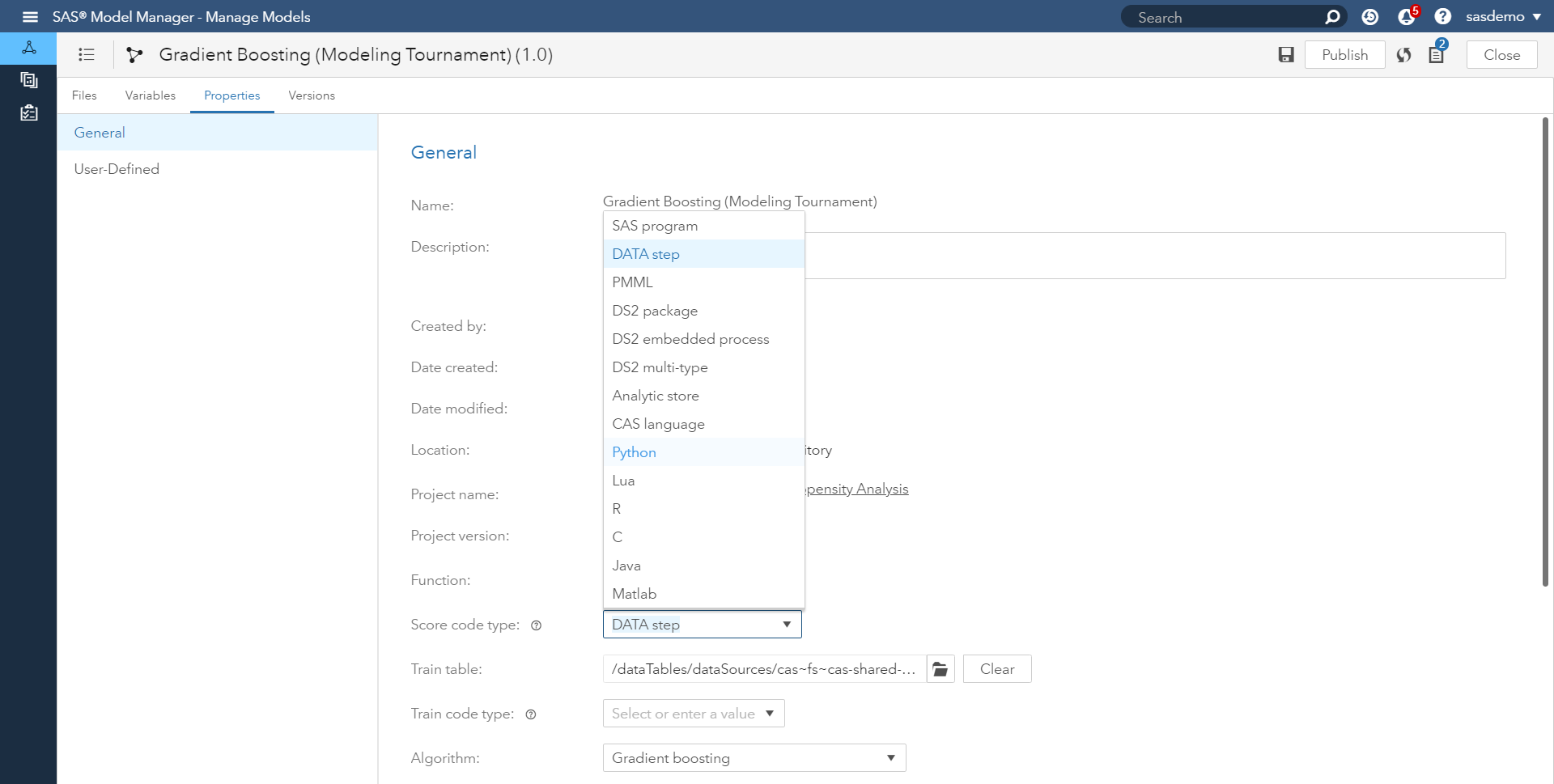

The project's metadata includes information such as the name of the project, the type of model function (classification, clustering, prediction, forecasting, etc.), the project owner, the project location and the variables that are used by project processes. Want to deploy the model's scoring code in SAS, CAS, R, Python or another language? You can do so from within the model's properties.

Figure 3 highlights your options.
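As a rough illustration of what that project metadata holds, here is a minimal Python sketch of a project record. The class names, field names, folder path and variable names are hypothetical; this is not the repository's actual data model.

```python
from dataclasses import dataclass, field
from enum import Enum


class ModelFunction(Enum):
    """Model function types named in the project metadata."""
    CLASSIFICATION = "classification"
    CLUSTERING = "clustering"
    PREDICTION = "prediction"
    FORECASTING = "forecasting"


@dataclass
class Variable:
    name: str
    role: str  # "input" or "output" (hypothetical role labels)


@dataclass
class ModelProject:
    """Hypothetical in-memory record of a repository project's metadata."""
    name: str
    function: ModelFunction
    owner: str
    location: str  # folder path in the repository (illustrative)
    variables: list[Variable] = field(default_factory=list)

    def inputs(self) -> list[str]:
        """Names of the variables the project's models consume."""
        return [v.name for v in self.variables if v.role == "input"]

    def outputs(self) -> list[str]:
        """Names of the variables the project's models produce."""
        return [v.name for v in self.variables if v.role == "output"]


# Illustrative project; every value here is made up.
project = ModelProject(
    name="Web Conversion Propensity",
    function=ModelFunction.CLASSIFICATION,
    owner="analyst1",
    location="/Marketing/Conversion",
    variables=[
        Variable("engagement_score", "input"),
        Variable("viewed_ci_page", "input"),
        Variable("display_interactions", "input"),
        Variable("P_convert_yes", "output"),
    ],
)
```

In this sketch the variables live on the project rather than on a single model, echoing the idea that they are shared by project processes such as comparison and monitoring.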

Model validation processes can vary over time, but one thing stays constant: every step of the validation process needs to be logged. For example:

- Who imported what model when?

- Who selected what model as the champion model, when and why?

- Who reviewed the champion model for compliance purposes?

- Who published the champion to where and when?
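One way to picture this requirement is an append-only audit trail in which every event answers who, what, when and why. The Python sketch below is a hypothetical illustration of that idea, not how the product stores its history; the action names and the `detail` field are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    """One logged validation step: who did what to which model, when and why."""
    user: str
    action: str        # e.g. "import", "set_champion", "review", "publish" (illustrative)
    model: str
    timestamp: datetime
    detail: str = ""   # reason, compliance note or publish destination


class AuditLog:
    """Append-only log: events are recorded once and never edited or deleted."""

    def __init__(self) -> None:
        self._events: list[AuditEvent] = []

    def record(self, user: str, action: str, model: str, detail: str = "") -> AuditEvent:
        """Stamp the event with the current UTC time and append it."""
        event = AuditEvent(user, action, model, datetime.now(timezone.utc), detail)
        self._events.append(event)
        return event

    def history(self, model: str) -> list[AuditEvent]:
        """Return every event for one model in the order it was recorded."""
        return [e for e in self._events if e.model == model]
```

Recording an import, a champion selection with its reason, a compliance review and a publish event, then calling `history()` for that model, answers each of the questions above in order.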

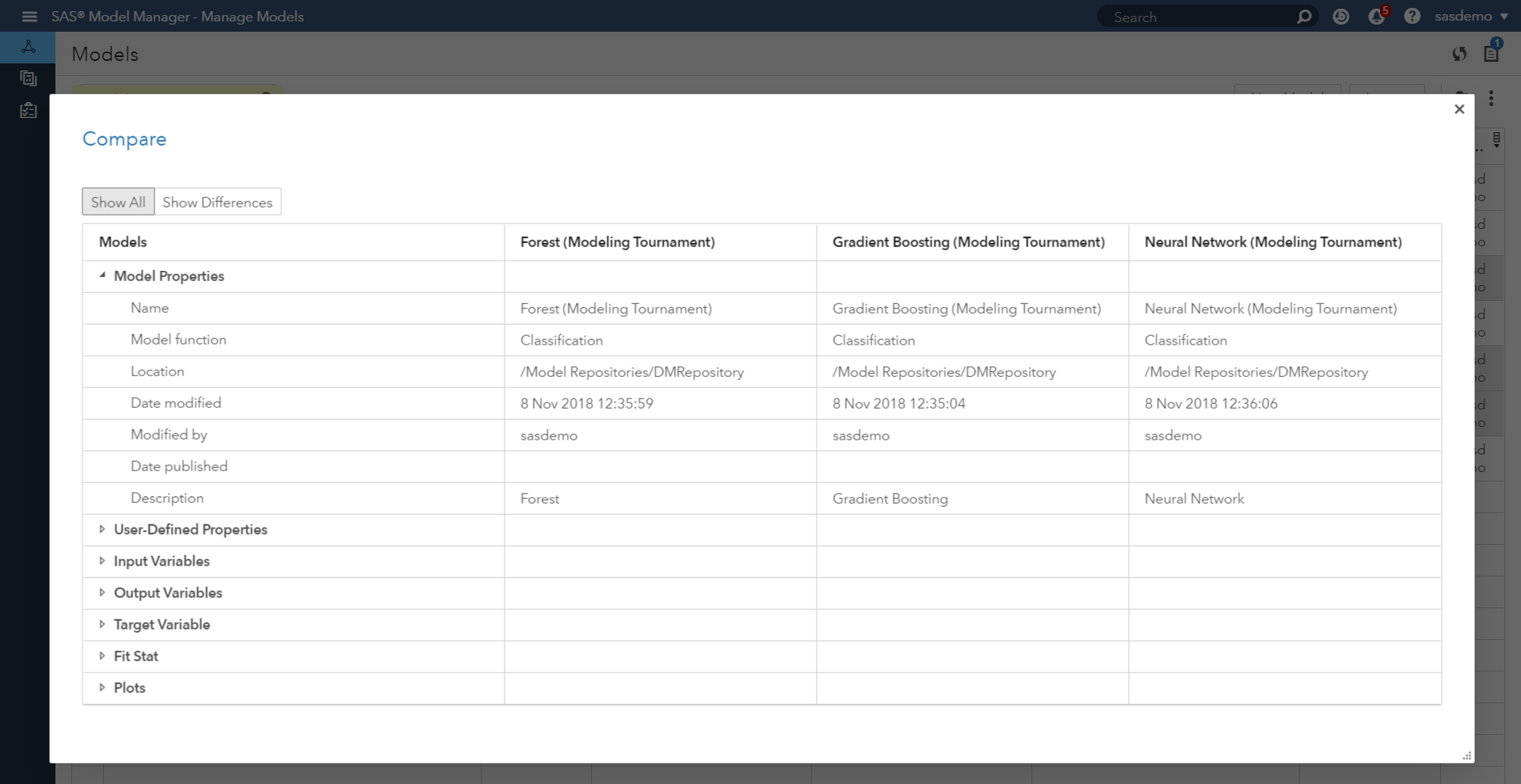

As Figure 4 shows, users can assess and compare models side by side. The model comparison output includes model properties, user-defined properties, variables, fit statistics and plots for the models.
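At its core, a comparison like this computes the same fit statistics for every candidate on the same validation data. The sketch below assumes a binary target and a 0.5 cutoff and uses two common statistics, misclassification rate and average squared error; the model names and toy values are made up for illustration.

```python
def misclassification_rate(actual: list[int], prob: list[float], cutoff: float = 0.5) -> float:
    """Share of rows whose predicted class (prob >= cutoff) differs from the actual class."""
    wrong = sum(int(p >= cutoff) != a for a, p in zip(actual, prob))
    return wrong / len(actual)


def average_squared_error(actual: list[int], prob: list[float]) -> float:
    """Mean of (actual - predicted probability)^2."""
    return sum((a - p) ** 2 for a, p in zip(actual, prob)) / len(actual)


def compare_models(actual: list[int], predictions: dict[str, list[float]]) -> list[dict]:
    """Build one row of fit statistics per model, sorted best (lowest ASE) first."""
    rows = [
        {
            "model": name,
            "misclassification": misclassification_rate(actual, prob),
            "ase": average_squared_error(actual, prob),
        }
        for name, prob in predictions.items()
    ]
    return sorted(rows, key=lambda r: r["ase"])


# Toy validation data, made up for illustration only.
actual = [1, 0, 1, 1, 0]
predictions = {
    "gradient_boosting": [0.9, 0.2, 0.8, 0.6, 0.1],
    "logistic_regression": [0.7, 0.4, 0.4, 0.6, 0.3],
}
for row in compare_models(actual, predictions):
    print(row)
```

Sorting on a single statistic is a simplification; in practice the choice of statistic depends on the business goal.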

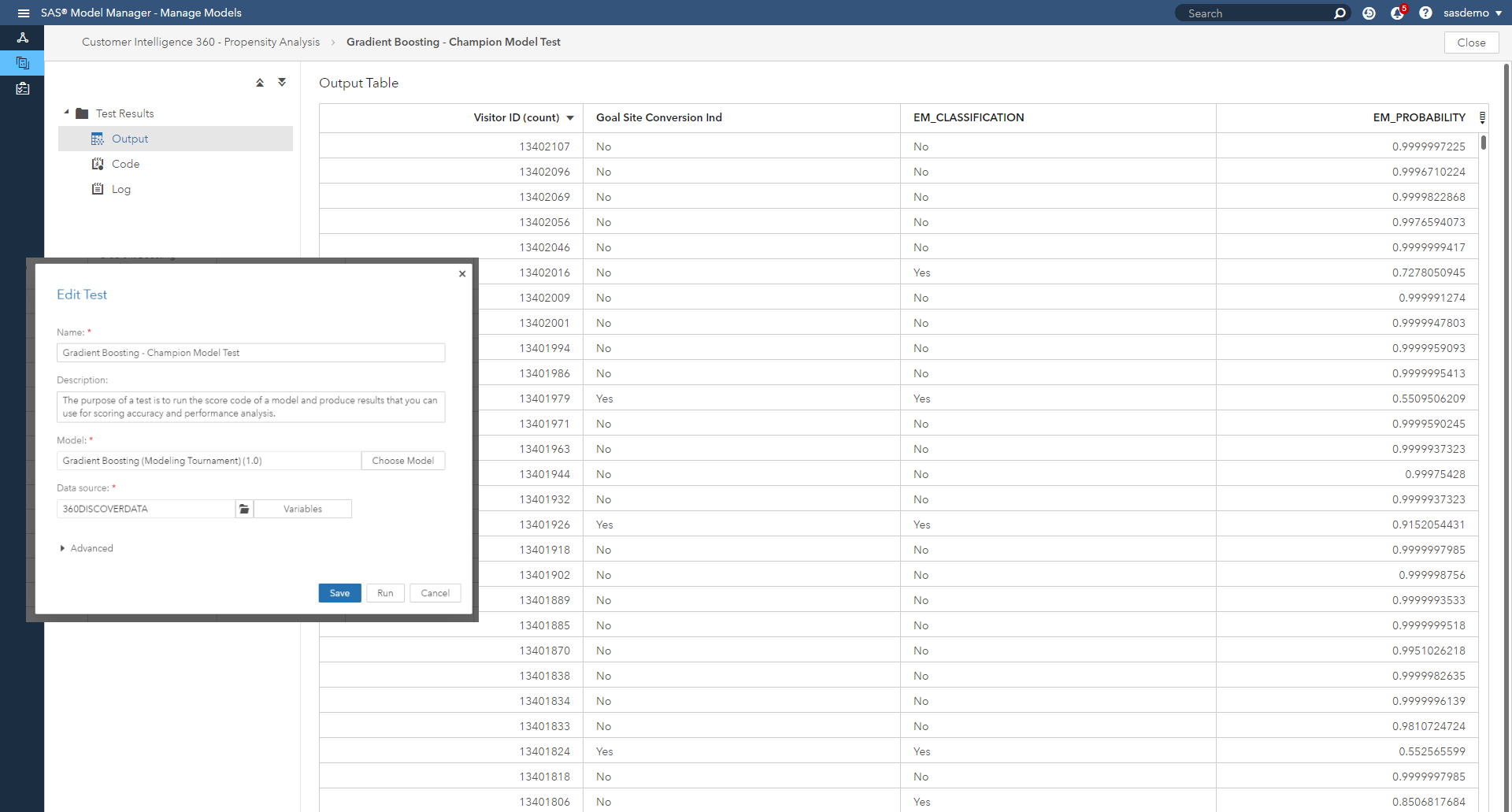

Before a new champion model is published for production deployment, an organization might want to test the model for operational errors. This type of pre-deployment check is especially important when the model will be deployed in real-time scoring use cases. The purpose of a test is to run a model's score code and produce results that can be used to analyze scoring accuracy and performance.

Figure 5 shows a snapshot of the champion model's scoring logic successfully assigning propensities to sas.com web visitors, along with yes/no classifications predicting whether each prospect is likely to meet the defined conversion goal.
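A pre-deployment test of this kind can be sketched as running score code over a set of test rows, capturing operational errors, timing the run and turning each propensity into a yes/no classification. In the Python sketch below, `stand_in_score` is a hypothetical logistic function with made-up weights standing in for generated score code; it is not the gradient boosting model from this post, and the input names and 0.5 cutoff are assumptions.

```python
import math
import time
from typing import Callable


def stand_in_score(row: dict) -> float:
    """Hypothetical stand-in for generated score code: a logistic function with made-up weights."""
    z = (-3.0 + 0.3 * row["engagement_score"]
         + 1.0 * row["viewed_ci_page"]
         + 0.5 * row["display_interactions"])
    return 1.0 / (1.0 + math.exp(-z))


def run_score_test(score: Callable[[dict], float], rows: list[dict], cutoff: float = 0.5) -> dict:
    """Score every test row, collecting outputs, operational errors and elapsed time."""
    results, errors = [], []
    start = time.perf_counter()
    for i, row in enumerate(rows):
        try:
            p = score(row)
            results.append({"row": i, "propensity": p, "convert": "yes" if p >= cutoff else "no"})
        except (KeyError, TypeError, ValueError) as exc:  # e.g. missing or malformed inputs
            errors.append({"row": i, "error": repr(exc)})
    return {"results": results, "errors": errors, "seconds": time.perf_counter() - start}
```

Rows that fail land in `errors` rather than stopping the run, so one pass reports both scoring output and operational problems such as a missing input column.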

When a model is ready for production scoring, users designate it as the champion. The project version that contains the champion model becomes the champion version for the project. Users can leverage challenger models to test the strength of champion models over time.

To ensure that a champion model in a production environment keeps performing well, users can collect performance data produced by the model at intervals your brand determines. Performance data is used to assess model prediction accuracy; for example, users might assess performance weekly, monthly or quarterly. Monitoring can be performed on both champion and challenger models, and as data trends change over time, the champion model can be improved by:

- Replacement by a challenger (another algorithm within the project starts fitting the data more accurately).

- An analyst tuning or refitting the model.
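The monitoring loop and the champion/challenger decision can be sketched in a few lines. The Python below assumes accuracy has already been computed for each model on each period's performance data; the decay threshold, the promotion rule, the model names and the period labels are assumptions for illustration rather than the product's logic.

```python
from dataclasses import dataclass


@dataclass
class PeriodResult:
    period: str                 # e.g. "Q1" or "2024-W01" (labels are illustrative)
    accuracy: dict[str, float]  # model name -> accuracy on that period's performance data


def review_champion(history: list[PeriodResult], champion: str,
                    baseline: float, max_drop: float = 0.05) -> tuple[str, list[str]]:
    """Return the (possibly replaced) champion and the periods where it decayed."""
    # A period counts as decayed when champion accuracy falls more than max_drop below baseline.
    decayed = [r.period for r in history if baseline - r.accuracy[champion] > max_drop]
    latest = history[-1]
    best = max(latest.accuracy, key=latest.accuracy.get)
    # Assumed promotion rule: replace the champion only if it decayed in the latest
    # period and the best challenger strictly outperforms it in that period.
    if latest.period in decayed and latest.accuracy[best] > latest.accuracy[champion]:
        champion = best
    return champion, decayed
```

The second branch of the list above, tuning or refitting, would happen outside this check: an analyst retrains the model and the refreshed version is monitored in the same way.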

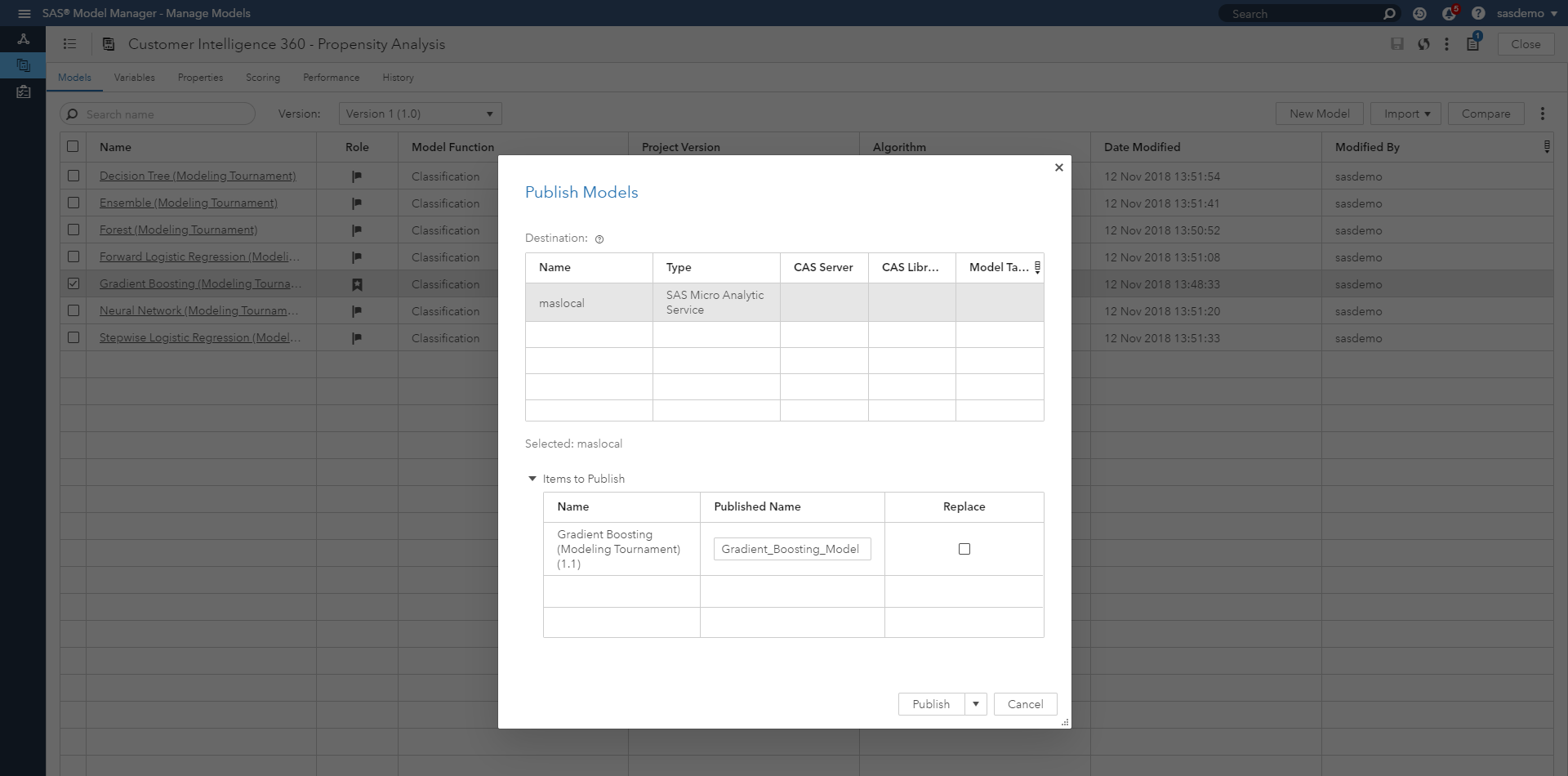

Users can publish models so that other applications can use them for tasks such as predictive scoring. Models can be published to destinations that are defined for CAS, Hadoop, SAS Micro Analytic Service and defined databases.

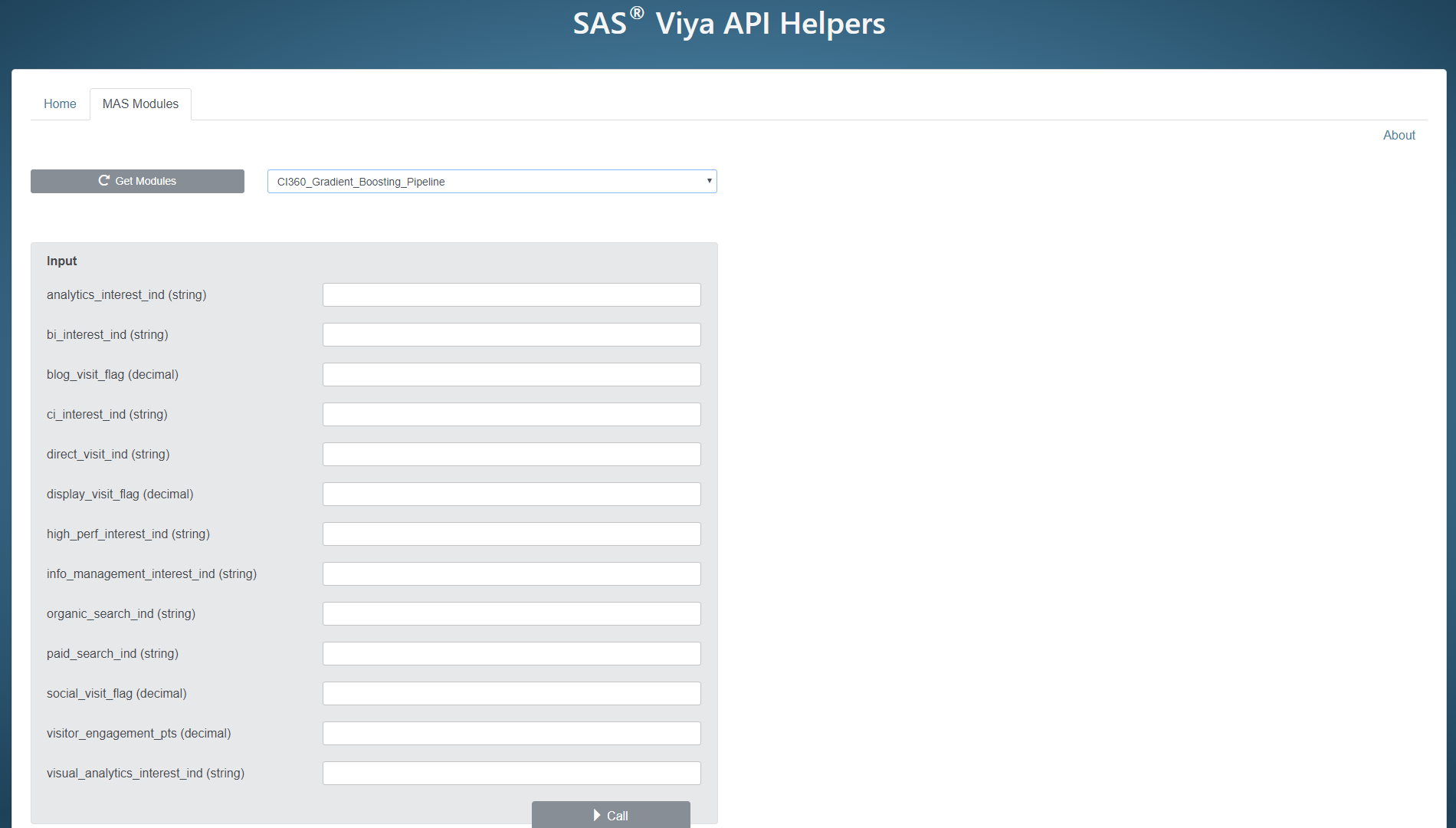

For analytically charged digital marketing, the SAS Micro Analytic Service is a powerful mechanism. For example, SAS and other client applications can call it as a web application through a REST interface. Envision a scenario where a visitor clicks on your website or mobile app, meets an event definition, and triggers a machine learning model that returns a fresh propensity score to personalize the next page of that digital experience. The REST interface (known as the SAS micro analytic score service) provides easy integration with client applications and adds persistence and clustering for scalability and high availability. For more technical details on using the SAS micro analytic score service, check this out.
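To give a feel for what a client call involves, here is a Python sketch that builds (without sending) a scoring request using only the standard library. The host, module ID, step ID, token and input names are hypothetical, and the URL path and `inputs` body shape are our assumption of the Micro Analytic Score REST API's step-execution request; confirm both, along with authentication, against the documentation for your SAS Viya release.

```python
import json
import urllib.request

# Hypothetical values: replace with your Viya host and the published module/step IDs.
VIYA_HOST = "https://viya.example.com"
MODULE_ID = "gradient_boosting_champion"
STEP_ID = "score"


def build_score_request(inputs: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST asking the service to run one scoring step.

    The path and the {"inputs": [{"name": ..., "value": ...}]} body follow the
    shape assumed here for the Micro Analytic Score API; verify for your deployment.
    """
    body = {"inputs": [{"name": k, "value": v} for k, v in inputs.items()]}
    url = f"{VIYA_HOST}/microanalyticScore/modules/{MODULE_ID}/steps/{STEP_ID}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


# Hypothetical visitor attributes for one web event.
req = build_score_request(
    {"engagement_score": 8, "viewed_ci_page": "Yes", "display_interactions": 1},
    token="<access-token>",
)
```

Passing `req` to `urllib.request.urlopen` would send it; what comes back depends on the outputs defined by the published module.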

To bring this to life, let’s demonstrate how it works.

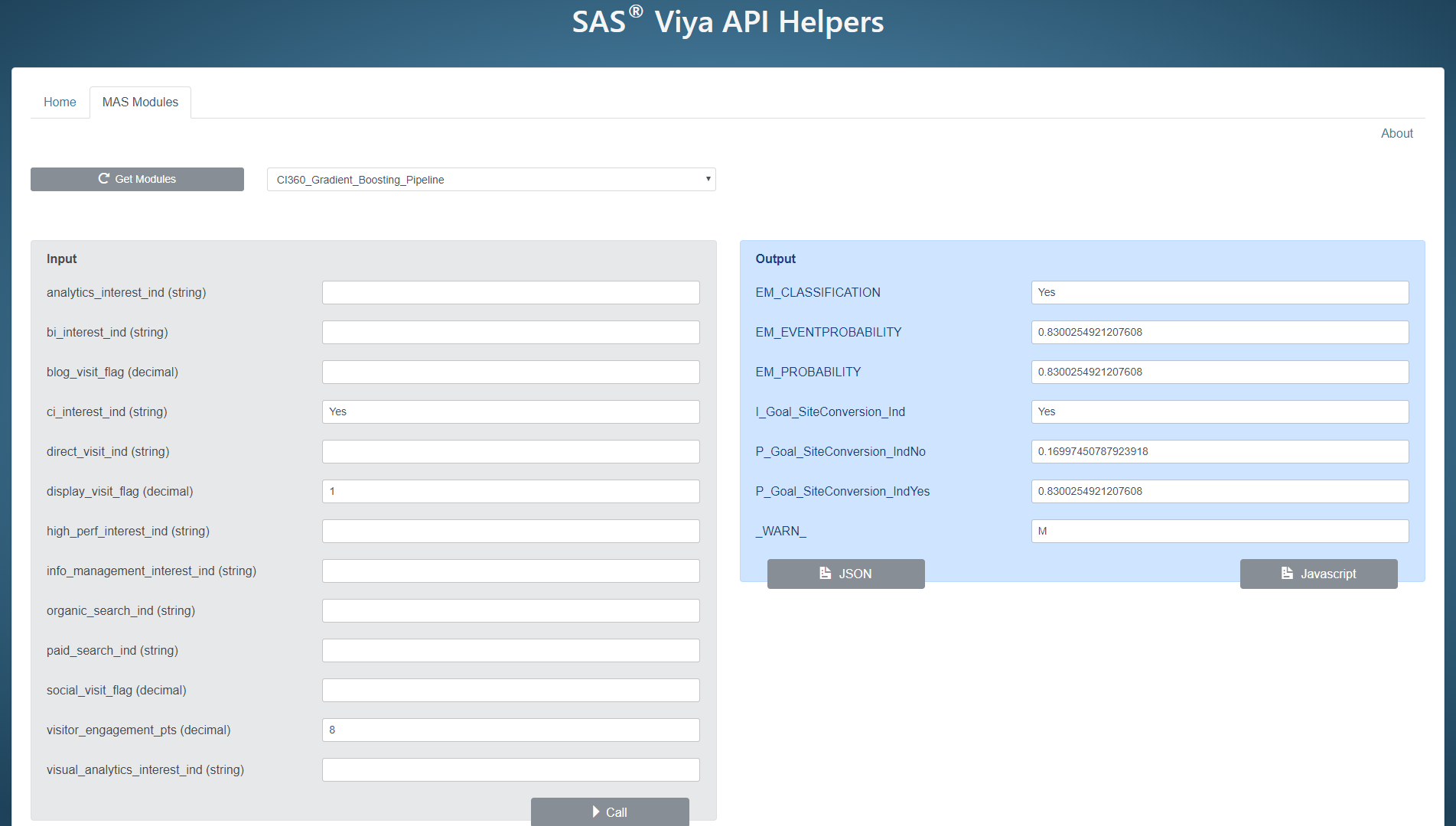

The figure above offers a non-technical way to see how SAS Customer Intelligence 360 calls the champion gradient boosting model. Input parameter values can be entered to simulate different scenarios. For this example, I will provide values for:

- Visitor engagement score: 8.

- Viewing of the Customer Intelligence web page: Yes.

- Display media interactions: 1 or more.

These values represent visitor behaviors to sas.com that can be used for scoring.

Figure 8 shows what happens after the model is called with those input values. For a sas.com visitor with those specific data points, the model predicts that the visitor will convert with a probability of 83 percent. I can run other simulations to assess how other visitors will behave, as well as confirm that my model successfully produces actionable scores when called.

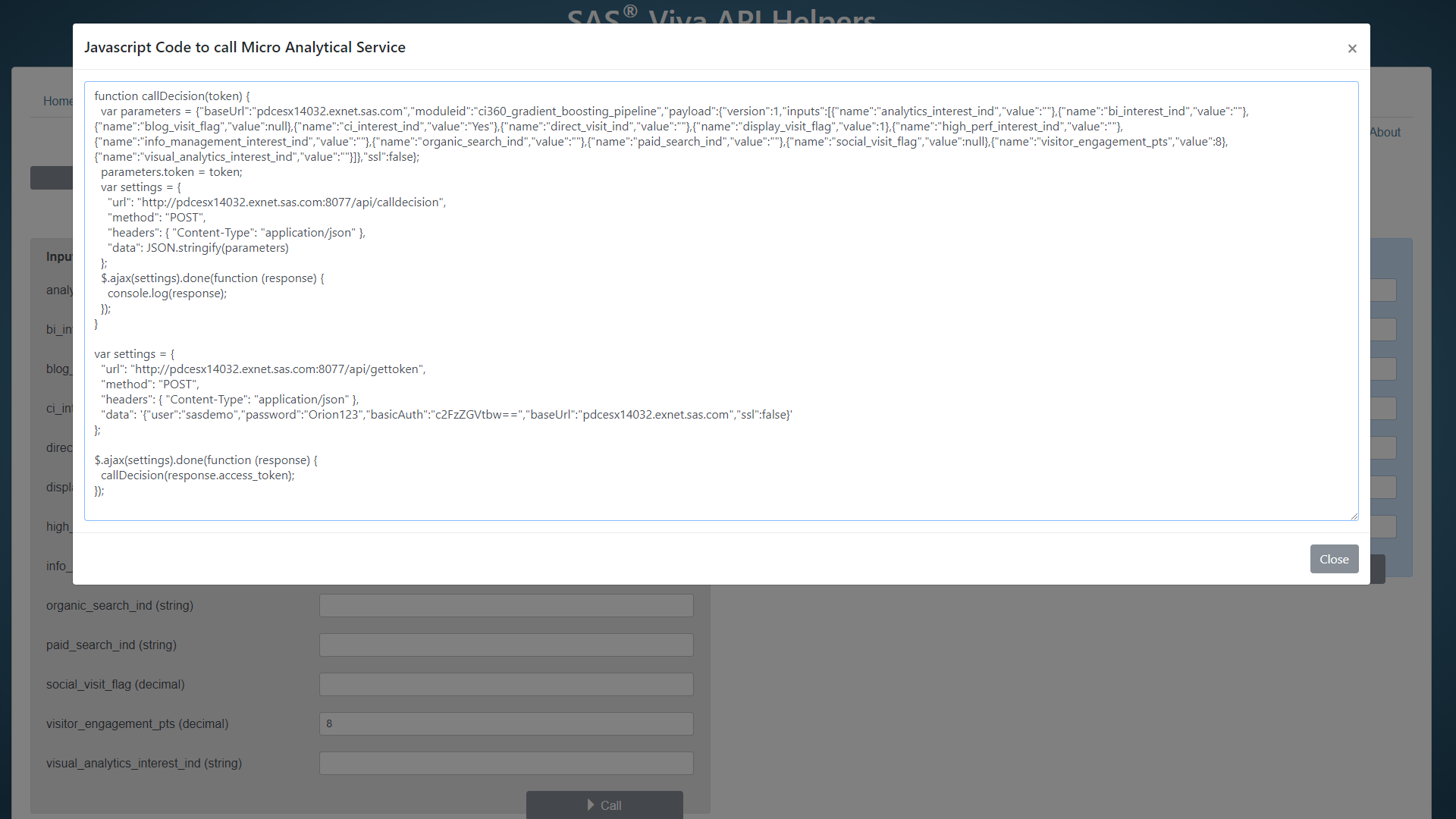

For technical readers who want to view the program that calls the model, here is a JavaScript view.

SAS Customer Intelligence 360 enables brands to use first-party data to make better decisions using predictive analytics and machine learning in conjunction with business rules across a hub of channel touch points. As your journey into analytical marketing use cases progresses, don't let your modeling intellectual property go underused. It's competitive differentiation waiting to be deployed.
