This is the sixth post in my series of machine learning best practices. If you've come across the series for the first time, you can go back to the beginning or read the whole series.

Aristotle was likely one of the first data scientists: he studied the world empirically, learning through observation. Like most data scientists, he saw how phenomena change over time. For a model, this means that the statistical properties of the target it predicts change over time, a phenomenon known as concept drift.

I also adhere to the change-anything-changes-everything (CACE) principle: changing one feature, or adding a new one, can change the behavior of your entire model. The features themselves (the characteristics and attributes you model on) can also change over time.

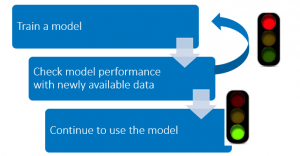

Here are some tricks I use to compensate for the temporal effect:

- Calculate population stability indexes and characteristic monitoring statistics to measure model decay at frequent intervals.

- Monitor the ROC curve and lift for classification models.

- If you have put enough rigor into deploying your model, set up monitoring jobs that detect model decay.

- Schedule retraining jobs at well-defined intervals.

- Survival analysis, which incorporates timing to predict when an event will happen rather than only whether it will, goes beyond point predictions and is not used enough. I specifically recommend survival data mining using discrete-time logistic models.

- Recurrent neural networks are also well suited to modeling sequence data.
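The population stability index mentioned in the first bullet compares how a variable (or the model score) is distributed in a baseline sample, such as the training data, with a recent scoring sample: PSI = Σ (actual − expected) · ln(actual / expected), summed over bins. The Python sketch below is one common formulation, assuming bins cut at the baseline's deciles and a small epsilon so an empty bin does not produce log(0). The function names are hypothetical, not taken from any particular library.

```python
import math
import random
from bisect import bisect_right


def quantile_edges(baseline, n_bins=10):
    """Interior bin edges placed at the baseline's empirical quantiles."""
    data = sorted(baseline)
    n = len(data)
    return [data[(n * k) // n_bins] for k in range(1, n_bins)]


def bin_proportions(values, edges):
    """Share of values falling into each bin defined by the sorted edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline, current, n_bins=10, eps=1e-6):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    edges = quantile_edges(baseline, n_bins)
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        # Floor empty bins at eps so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    # Synthetic data: the recent sample's mean has shifted by half a standard deviation.
    random.seed(0)
    train_scores = [random.gauss(0.0, 1.0) for _ in range(5000)]
    recent_scores = [random.gauss(0.5, 1.0) for _ in range(5000)]
    print(f"PSI: {population_stability_index(train_scores, recent_scores):.3f}")
```

A widely quoted rule of thumb (a convention, not a standard) treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift. Computing it for each input variable, not only for the score, helps locate which characteristics are drifting.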
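For the bullet on monitoring classification models, the sketch below computes two quantities worth tracking on each new batch of scored cases once their outcomes are known: the area under the ROC curve (using the rank-sum, or Mann-Whitney, formulation with average ranks for tied scores) and the lift in the top fraction of scores. The function names and the default 10% cutoff are assumptions for illustration.

```python
def roc_auc(y_true, scores):
    """Area under the ROC curve via the rank-sum (Mann-Whitney U) formula."""
    pairs = sorted(zip(scores, y_true), key=lambda p: p[0])
    n = len(pairs)
    ranks = [0.0] * n
    i = 0
    while i < n:
        # Give tied scores the average of the 1-based ranks they span.
        j = i
        while j + 1 < n and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1
        i = j + 1
    n_pos = sum(1 for _, y in pairs if y == 1)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs both positive and negative cases")
    rank_sum = sum(r for r, (_, y) in zip(ranks, pairs) if y == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def lift_at(y_true, scores, fraction=0.1):
    """Response rate among the top `fraction` of scores divided by the overall rate."""
    ranked = sorted(zip(scores, y_true), key=lambda p: p[0], reverse=True)
    k = max(1, round(len(ranked) * fraction))
    top_rate = sum(y for _, y in ranked[:k]) / k
    base_rate = sum(y_true) / len(y_true)
    return top_rate / base_rate


if __name__ == "__main__":
    # Tiny made-up batch of outcomes and model scores.
    y = [1, 0, 1, 0, 0, 1, 0, 0, 0, 0]
    s = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
    print("AUC:", roc_auc(y, s), "lift@20%:", lift_at(y, s, fraction=0.2))
```

Comparing these values on recent data with the values recorded at deployment gives a simple decay signal: a falling AUC or lift suggests the model no longer ranks cases as well as it did.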
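The discrete-time logistic approach recommended in the survival bullet works by expanding each subject into one row per time period at risk, with a target of 1 only in the period where the event occurs. A logistic regression with one indicator per period (the baseline hazard) plus covariates then estimates the hazard h(t | x), and the survival curve is the running product of 1 − h(t | x). The sketch below uses pure Python and a plain gradient-descent fit so that it stays self-contained; the function names, learning rate, epoch count, and toy data are assumptions, and in practice you would fit the expanded data with a proper logistic regression routine.

```python
import math


def expand_person_period(durations, events, covariates):
    """One row per subject per period at risk; target is 1 only in the event period."""
    max_t = max(durations)
    rows, targets = [], []
    for t_end, event, x in zip(durations, events, covariates):
        for t in range(1, t_end + 1):
            # One indicator per period acts as the baseline hazard (no separate intercept).
            period = [1.0 if t == s else 0.0 for s in range(1, max_t + 1)]
            rows.append(period + list(x))
            targets.append(1.0 if (event and t == t_end) else 0.0)
    return rows, targets


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(rows, targets, lr=1.0, epochs=2000):
    """Plain batch gradient descent on the mean logistic log-loss."""
    w = [0.0] * len(rows[0])
    n = len(rows)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(rows, targets):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w


def survival_curve(w, x, n_periods):
    """S(t | x) = product over s <= t of (1 - h(s | x)); n_periods must match the fit."""
    curve, surv = [], 1.0
    for t in range(1, n_periods + 1):
        period = [1.0 if t == s else 0.0 for s in range(1, n_periods + 1)]
        hazard = sigmoid(sum(wi * xi for wi, xi in zip(w, period + list(x))))
        surv *= 1.0 - hazard
        curve.append(surv)
    return curve


if __name__ == "__main__":
    # Made-up toy data: subjects with x = 1 tend to experience the event sooner.
    durations = [1, 1, 1, 2, 2, 3, 3, 4, 1, 2, 3, 3, 4, 4, 4, 4]
    events = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0]
    covariates = [(1.0,)] * 8 + [(0.0,)] * 8
    rows, targets = expand_person_period(durations, events, covariates)
    w = fit_logistic(rows, targets)
    for x in [(0.0,), (1.0,)]:
        print(x, [round(s, 3) for s in survival_curve(w, x, max(durations))])
```

Unlike a single point prediction, the output is a full curve per subject, so you can read off, for example, the estimated probability that a customer is still active after three periods.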
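To illustrate why recurrent networks suit sequence data, the sketch below runs the forward pass of a minimal (Elman-style) recurrent layer, h_t = tanh(W_xh x_t + W_hh h_{t−1} + b). The hidden state carries information from earlier steps forward, so the same inputs presented in a different order produce a different final state. The weights are arbitrary placeholders rather than trained values, and a real application would typically use a deep learning framework with trained LSTM or GRU layers.

```python
import math


def rnn_forward(sequence, w_xh, w_hh, b_h):
    """Forward pass of a single tanh recurrent layer; returns the hidden state at every step."""
    hidden_size = len(b_h)
    h = [0.0] * hidden_size  # initial hidden state
    states = []
    for x_t in sequence:
        # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b); the right-hand side still sees h_{t-1}.
        h = [
            math.tanh(
                sum(w_xh[i][j] * x_t[j] for j in range(len(x_t)))
                + sum(w_hh[i][k] * h[k] for k in range(hidden_size))
                + b_h[i]
            )
            for i in range(hidden_size)
        ]
        states.append(h)
    return states


if __name__ == "__main__":
    # Arbitrary placeholder weights: 1 input feature, 2 hidden units.
    w_xh = [[0.5], [-0.3]]
    w_hh = [[0.8, 0.0], [0.2, 0.5]]
    b_h = [0.0, 0.1]
    early = rnn_forward([[1.0], [0.0], [0.0]], w_xh, w_hh, b_h)
    late = rnn_forward([[0.0], [0.0], [1.0]], w_xh, w_hh, b_h)
    print("final state, spike first:", early[-1])
    print("final state, spike last: ", late[-1])
```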

How do you manage change in your modeling efforts? Let me know by leaving a comment.

My next post will be about generalization. To read all the posts in this series, click the image below.