The SAS In-Database Embedded Process is the key technology which enables the SAS® Scoring Accelerator, SAS® Code Accelerator, and SAS® Data Quality Accelerator products to function. The EP is the computation engine we place near the data, which reduces unnecessary data movement and speeds up processing and efficiency. The EP also allows products such as the SAS® Data Loader for Hadoop to work remotely with data and it provides the ability to read and write multiple streams of data concurrently.

The EP’s capabilities as part of SAS In-Database technology can vary from one supported data provider to another – as documented here. For this post, we’ll look closely at the SAS Embedded Process for Hadoop.

Alongside the release of SAS 9.4 Maintenance 3 in the summer of 2015, the EP was upgraded with new capabilities as well. The EP now brings more computation to the data by introducing support for new data providers and more analytic capabilities, all of which is delivered through improved installation procedures and running with a new execution architecture. Let’s take a closer look.

What new things can the EP do?

Some significant enhancements were added to EP with SAS 9.4M3:

- Code Accelerator supports:

- a SET statement with embedded SQL

- a SET statement that specifies multiple input tables

- the MERGE statement

- reading and writing of HDFS-SPD Engine file formats

- Scoring Accelerator supports:

- model scoring using analytic stores

- the SAS_HADOOP_CONFIG_PATH environment variable for use with run and publish model macros (eliminates the need for a merged configuration file!)

These changes allow the EP to provide more functionality while also simplifying its operation. Nice!

Additional details on what’s new can be found in the SAS® 9.4 In-Database Products: User’s Guide, Sixth Edition document.

What new providers does the EP work with?

The previous version of the EP supported the following Hadoop data providers:

- Cloudera

- Hortonworks

And now the EP also supports the following Hadoop distributions:

- MapR

- IBM BigInsights

- Pivotal HD

SAS tracks which Hadoop distributions are supported in various scenarios.

Because most other Hadoop distributions are built using the open-source Apache Hadoop codebase and/or support the common Hadoop APIs, then it’s possible our SAS technology will work with them, too. SAS therefore has a support statement about alternative Hadoop distributions which explains how we manage that support.

How do the new deployment methods work?

Installing the EP has been improved in a couple of ways.

- The original command-line installation process has been simplified

- Some tasks have been consolidated to eliminate a few steps

- JAR files can be kept local to the EP's directory structure

- SAS Deployment Manager can create a parcel or stack

- Use Cloudera Manager to deploy the parcel to the cluster

- Use Ambari to deploy the stack to Hortonworks

The command-line technique is the most flexible and can be used for all supported Hadoop distributions. The command-line technique must be used for MapR, IBM BigInsights, and Pivotal HD as well as older and alternative Hadoop distributions.

The parcel/stack technique, on the other hand, provides a familiar deployment option for folks already comfortable using those third-party tools.

Additional details on deployment options are described in the SAS(R) 9.4 In-Database Products: Administrator’s Guide, Sixth Edition document.

How does the EP run now?

If you’ve worked with the EP for Hadoop before, then you probably are familiar with its operation. In particular, the older version of the EP was deployed to each Data Node host of the Hadoop cluster and ran as an OS-level service. This means it was defined in the init.d system to automatically run as a service. A system administrator could use the service command to start, stop, and check the status of the EP processes individually on each host.

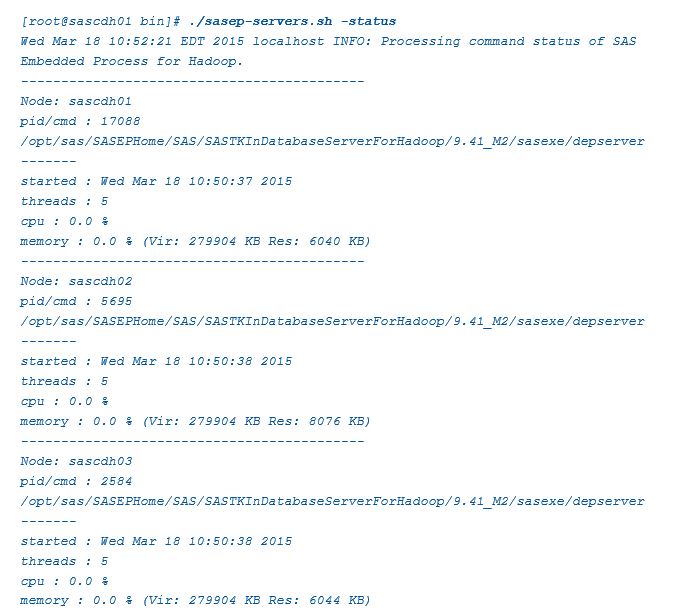

Here’s what the old EP status check looked like in a small, 3-node Hadoop cluster:

Note that the OS-level process id (pid) is listed along with the command executed on each host. Each of these processes are independently controlled outside of MapReduce. If any one of these processes is terminated without also disabling the associated MapReduce node, then any jobs which rely on the EP will fail.

With this new release of the EP, there is no longer any OS-level service component. The EP runs purely as MapReduce jobs on the Hadoop cluster, instantiating on demand, no longer as persistent services. It is still necessary for the EP to have its requisite JAR files deployed to each of the Data Node hosts, but there are no longer any long-running EP processes sitting idle when no real work is going on.

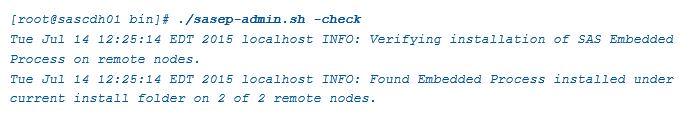

And here’s what the new EP status check looks like on the same small, 3-node Hadoop cluster:

Note that for the new EP, this status check simply confirms if the correct JARs are available on the remote hosts (and assumes the local host which is performing this check already has those same JARs). This new approach to EP execution is less likely to be impacted by the inadvertent termination of one or more OS-level services as represented in the old EP status check above.

See the SASEP-ADMIN.SH Script section of the SAS® 9.4 In-Database Products: Administrator’s Guide, Sixth Edition document for an explanation on managing the EP using the script parameters.

What backward compatibilities does the EP provide?

The EP for Hadoop released along with SAS 9.4 M3 is backward-compatible in a several ways. Without diving into all possible software versioning variations and assuming proper qualifications, the new EP can be deployed independently and will work with:

- SAS 9.3 and later, including:

- LASR and High-Performance Analytics software

- SAS code written and tested before the 9.4 M3 release of SAS

- MapReduce1 (as associated with older SAS-supported Hadoop distributions)

This means that, assuming all other deployed software is at minimum version levels, the EP for Hadoop software can be upgraded first and independently from everything else so your customer can have many of the advantages (but not all!) this new release offers without impacting any other aspects of the software deployment.

Of course, some of the advantages offered along with EP necessitate upgrading other components, too. A simple example is eliminating the merged configuration file and relying on the SAS_HADOOP_CONFIG_FILES directive instead. That’s really on the SAS environment side of things – and so SAS 9.4 must be upgraded to M3 to take advantage of that. The flip side is that if you also upgrade to SAS 9.4 M3, but do not change any saved program files which specify a merged configuration file, then they will continue to run okay assuming you’ve updated that merged configuration file with the information needed to contact the new EP (if anything changed).

See Backward Compatibility and the Upgrading from or Reinstalling a Previous Version sections of the SAS® 9.4 In-Database Products: Administrator’s Guide, Sixth Edition document for more information.

7 Comments

It would be nice to see an updated version of this for recent releases.

Here is also the links to the backwards compatibility for the EP in newer guide if anyone is looking for later releases than M6. Ninth edition documentation will be updated soon with the information as well.

SAS® 9.4 In-Database Products: Administrator’s Guide, Eighth Edition

https://go.documentation.sas.com/?docsetId=indbag&docsetTarget=n104dbte7mppc0n1preqbdrvzgr8.htm&docsetVersion=9.4&locale=en

SAS® 9.4 In-Database Products: Administrator’s Guide, Ninth Edition (Backwards Compatibility section coming soon for M6 administration guide)

https://go.documentation.sas.com/?docsetId=indbag&docsetTarget=p1qt68x0lce2lmn19wpenhp6ibsb.htm&docsetVersion=9.4_01&locale=en

(when it is available the ninth edition should have a separate section for backwards compatibility that should be available soon in the left section of the link above.)

Hi, for installing the SASEP on an already installed Cloudera cluster, it's just needed to correctly install the parcel? Thanks in advance

Juan,

If your Cloudera Distribution Including Apache Hadoop (CDH) cluster is already up & running, then yes, you can deploy the SAS Embedded Process as a Cloudera Parcel. I recommend referring to the latest documentation, SAS® 9.4 In-Database Products: Administrator’s Guide, Eighth Edition.

Be sure to verify the pre-requisites for the deployment - not just software version numbers, but also that you have the expected and necessary SAS 9.4 software licensed and installed as well (notably SAS/ACCESS Interface to Hadoop along with the required Hadoop client JARs and config XML files).

And when you're ready to proceed, then follow the instructions in the Admin Guide > Part 2: Administrator’s Guide for Hadoop > Deploying the In-Database Deployment Package Using the SAS Deployment Manager.

Alternatively, manual deployment of the SAS Embedded Process is supported for all deployment scenarios - and if the Cloudera Parcel approach doesn't work for some reason at your site, then manual deployment may be good for you.

Hope this helps,

Rob

Is there a way to set a specific Yarn Queue for Embedded Process Mapreduce jobs? Maybe in ep-config.xml on HDFS?

Lorenzo,

James Kochuba presented an excellent SGF 2016 paper on SAS resource management with YARN: Best Practices for Resource Management in Hadoop.

Besides the SAS Embedded Process, James covers a variety of SAS technologies along with Hadoop-specific considerations to enable you to build a comprehensive strategy for managing resources.

HTH,

Rob

Yes - more info is available in the SAS documentation, SAS/ACCESS® 9.4 for Relational Databases: Reference, Seventh Edition:

> Passing Functions to the DBMS Using PROC SQL

> Passing SAS Functions to Hadoop

> SQL Pass-Through Facility Specifics for Hadoop

Cheers!

Is this a correct statement ?

"Without SAS EP installed on the hadoop cluster, only SAS code that has explicit Hive SQL pass through queries and limited set of BASE SAS procedures (FREQ, MEANS etc.) will run entirely on the hadoop cluster, and any traditional DATA step program (including DS2) written will involve back and forth between SAS and Hadoop, same as any other RDBMS configured for SAS/ACCESS.