Staying competitive in a big data world means working fast and making decisions even faster. You need to assess conditions, approve access, stop transactions and reroute activities quickly so you can seize opportunities or prevent problems.

With increasing data volumes from the Internet of Things (Cisco predicts that fifty billion devices will be connected to the Internet by 2020), how can organizations consume and gain insights from these growing data streams? Event stream processing (ESP) has been positioned to address this challenge, yet how do you know if you really need it?

Let’s begin by defining what event stream processing is. According to Wikipedia, event stream processing is a set of technologies designed to assist the construction of event-driven information systems. ESP deals with the task of processing streams of event data with the goal of identifying the meaningful patterns within those streams, employing techniques such as detection of relationships between multiple events, event correlation, event hierarchies and other aspects such as causality, membership and timing.

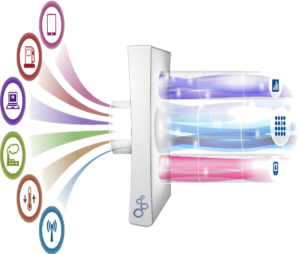

That’s great, but what does it really mean? In simple terms, ESP allows organizations to continuously analyze data … updating intelligence as new events occur … and helps drive business action in real time. The key takeaway is the word “continuous.” ESP allows data to be processed without the traditional information lag that most analytical systems suffer from. Instead of storing data and running queries against it, ESP stores the queries and streams data through them with low latency.
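The "store the queries, stream the data" idea can be illustrated with a minimal sketch. This is not the API of any particular ESP product: `StandingQueryEngine`, `RunningAverage`, and the `temperature` field are hypothetical names chosen for illustration. The engine holds registered queries, and each query updates its result incrementally as events arrive instead of re-scanning stored data.

```python
from typing import Callable, Dict, List

Event = Dict[str, float]


class StandingQueryEngine:
    """Hypothetical engine: holds registered queries and pushes each event through them."""

    def __init__(self) -> None:
        self._queries: List[Callable[[Event], None]] = []

    def register(self, query: Callable[[Event], None]) -> None:
        # The query is stored once and stays active for all future events.
        self._queries.append(query)

    def publish(self, event: Event) -> None:
        # Data flows through the stored queries; nothing is persisted here.
        for query in self._queries:
            query(event)


class RunningAverage:
    """Standing query that keeps an incrementally updated mean of one field."""

    def __init__(self, field: str) -> None:
        self.field = field
        self.count = 0
        self.mean = 0.0

    def __call__(self, event: Event) -> None:
        # Incremental update: no history is kept, only count and current mean.
        self.count += 1
        self.mean += (event[self.field] - self.mean) / self.count


engine = StandingQueryEngine()
avg_temp = RunningAverage("temperature")
engine.register(avg_temp)

for reading in (20.0, 22.0, 24.0):
    engine.publish({"temperature": reading})

print(avg_temp.mean)  # 22.0
```

The result is available after every event, which is the "continuous" property described above. A production engine would add windowing, fault tolerance, and distribution on top of this basic shape.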

ESP has been available in the market for years, but mainstream adoption has been slow due to organizational uncertainty around how to apply it … or whether it should be applied at all. Unfortunately, there is no “standard” rule for where ESP fits and where it doesn’t, and some use case scenarios for ESP are quite complex. However, two common scenarios where ESP should definitely be considered are:

- Real-time alerting.

- Data filtering or data aggregation.

If you have a use case that requires immediate notification of events to prevent or minimize downstream business impact, event stream processing is a great candidate to support the continuous analysis and alerting requirements. Since the data is processed in motion, improved notification times can be achieved, which can be critical for optimizing corrective action strategies. Event stream processing is optimized to execute incremental analytics, including threshold rules, correlations, pattern detection and general computation. If more advanced statistical models that depend on extended historical data are required, those likely would be pushed downstream into a batch execution model as part of a multi-phase analytical solution. But again … it depends!
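As a rough illustration of a threshold rule combined with simple pattern detection, the sketch below raises an alert only when several consecutive readings exceed a limit. The function name `consecutive_breach_alerts`, the `(timestamp, value)` event shape, and the sample numbers are assumptions made for this example, not part of any specific ESP product.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple

Reading = Tuple[int, float]  # (timestamp, value); hypothetical event shape


def consecutive_breach_alerts(
    readings: Iterable[Reading], threshold: float, run_length: int
) -> Iterator[Reading]:
    """Yield a reading when it and the previous run_length - 1 readings all exceed threshold."""
    recent: Deque[float] = deque(maxlen=run_length)  # bounded state, oldest value drops off
    for timestamp, value in readings:
        recent.append(value)
        if len(recent) == run_length and all(v > threshold for v in recent):
            # The alert is emitted as soon as the pattern completes, while data is in motion.
            yield timestamp, value


stream = [(1, 70.0), (2, 91.0), (3, 95.0), (4, 97.0), (5, 80.0), (6, 99.0)]
alerts = list(consecutive_breach_alerts(stream, threshold=90.0, run_length=3))
print(alerts)  # [(4, 97.0)]
```

Because the rule only keeps the last `run_length` values, it fits the incremental style described above. A model that needs months of history would not fit this shape and is the kind of work that would typically move downstream to batch.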

Another area where ESP is gaining traction is data filtering and aggregation. As discussed earlier, organizations are facing increasing collection and storage demands as part of the growth of the Industrial Internet. To manage the volume of their inbound data streams effectively, organizations can apply ESP to intelligently down-select data based on event triggers or through various summarization techniques. This approach reduces the load on analytical systems and helps control rising storage and infrastructure costs.
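Both techniques can be sketched in a few lines. The example below is illustrative only: `changed_by` (an event-trigger filter that forwards a reading only when it moves far enough from the last forwarded value) and `summarize_tumbling` (a fixed-size window summary) are hypothetical helper names, and the sample readings are made up.

```python
from typing import Dict, Iterable, Iterator, List, Optional


def changed_by(readings: Iterable[float], min_delta: float) -> Iterator[float]:
    """Forward a reading only if it differs from the last forwarded one by at least min_delta."""
    last: Optional[float] = None
    for value in readings:
        if last is None or abs(value - last) >= min_delta:
            last = value
            yield value


def summarize_tumbling(readings: Iterable[float], window_size: int) -> Iterator[Dict[str, float]]:
    """Yield one summary record per non-overlapping window of window_size readings."""
    batch: List[float] = []
    for value in readings:
        batch.append(value)
        if len(batch) == window_size:
            yield _summary(batch)
            batch = []
    if batch:  # emit the trailing partial window rather than dropping it
        yield _summary(batch)


def _summary(batch: List[float]) -> Dict[str, float]:
    return {
        "count": len(batch),
        "min": min(batch),
        "max": max(batch),
        "mean": sum(batch) / len(batch),
    }


raw = [10.0, 10.1, 10.2, 12.0, 12.1, 15.0, 15.0, 15.2]
filtered = list(changed_by(raw, min_delta=1.0))
summaries = list(summarize_tumbling(raw, window_size=4))
print(filtered)        # [10.0, 12.0, 15.0]
print(len(summaries))  # 2
```

In this sample, eight raw readings shrink to three forwarded values or two summary records, which is the kind of reduction that lowers downstream storage and processing load. The right `min_delta` and window size depend on how much detail the downstream analysis actually needs.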

At the end of the day, every customer situation will vary, so use cases and value propositions need to be clearly understood and evaluated. There are times when ESP can drive tremendous value, but it should be balanced with traditional processing systems to ensure the expected results are achieved. Real-time execution should be thought of in terms of what the “right time” execution is for the problem at hand. If the current systems and infrastructure can’t support emerging needs, if there is a perceived deficiency in response time and efficiency, or if data growth is outpacing organizational capability, event stream processing may be the technology that you never knew you needed!

3 Comments

Nice job on this article Bryan! You nailed it.

This is a very clear and concise explanation of why and how ESP can provide value in our expanding data world.

Hello, fantastic information and an interesting article. It is going to be fascinating to see if this is still the state of affairs in a few months' time.