Imagine you’re a hungry black bear standing in a fast-moving river up in the Rocky Mountains. You peer down into the water as it swirls and bubbles past your legs. You know there are fish in there somewhere, and over the years you have seen all kinds: big ones, small ones, fast ones, slow ones, brown ones, shiny ones, green, blue and yellow ones. There are probably some passing you right now, but you won’t be able to see them unless you hold your breath and stick your head in the stream.

Under the water, there is a fog of small stones and plant debris streaming past, but you’re not interested. You are only looking for a specific pattern or shape because you know that’s what lunch looks like.

Now imagine you are the CIO of a traditional retail business, looking for the best way to steal a march on your competitors. They, like you, have plenty of back-office capability to analyse the historical shopping habits of their customers. This information helps you communicate with customers better, but there is only so much you can do with it, especially because your customer focus area this week is the tech-dependent ‘Gen Z’.

Since you also have two teenagers yourself, you know they all consume and share information in huge networks using tools like Snapchat, Twitter and FB. You also know that they don’t really use email, have never read any mail received through a physical letterbox, and spend most of their time watching YouTube and Netflix rather than television. They inhabit a world of very fast-moving, streaming information, where everything happens in the moment and anything more than a few hours old is of no value to them.

As that CIO, you realise you are just like the bear standing in the fast-moving stream. Unlike the bear, however, you cannot see anything that is streaming by because you don’t have the tools to stick your head in the stream. You have no capability that allows you to search for the fish and even if you did, you have no way to analyse and act on what you see in that moment.

A model for artificial intelligence

It is estimated that the human eye can transmit roughly 10 Mbit of information per second to the brain (about the same as an entry-level Ethernet card). The brain takes just 13 milliseconds to interpret what it sees in a single image.

That’s just one of our five senses.

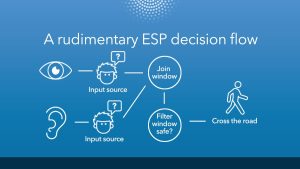

Through each sense, we receive a continuous ‘stream’ of real-time information from sensors and input points (ears, eyes, skin and so on). We use a combination of the information streamed to us from these sensors and our previous experiences to make real-time decisions automatically, throughout any given day.

What’s more, we learn. We merge the data from our senses with what we already know, and draw inferences to enable us to make decisions even in new situations. We are, in fact, a feat of amazing engineering. In very simplistic terms, what humans do to process this streaming information can be considered as a kind of continuous decision flow.

This is, of course, why humans are the model for artificial intelligence (AI).

It makes perfect sense that we would want a technology (or combination of technologies) that can mimic the same capabilities to take care of repetitive tasks of varying complexity.

This is exactly what SAS provides with our streaming analytics capability, Event Stream Processing (ESP), and our next-generation, high-performance analytics platform, SAS Viya.

Available solution

If we look at the above ESP project, each input is a data source for streaming analytics and each join is a window or view onto the data at that point. Remember the bear standing in the stream? We can consider the fish as events (messages or pieces of information) and the bear’s eyes as the window looking for events of a certain type. In this analogy, then, the events themselves are really just the streaming messages being passed around by applications and people on the internet, including IoT-enabled devices.
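To make the bear-and-fish analogy concrete, here is a minimal sketch in plain Python. It is not the SAS ESP API; the `Event` class, the `filter_window` function and the `looks_like_lunch` rule are hypothetical names invented for illustration. It only shows the idea of a window that lets matching events through and ignores the rest.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass(frozen=True)
class Event:
    """One message in the stream (hypothetical shape, for illustration only)."""
    kind: str
    size_cm: float


def filter_window(events: Iterable[Event],
                  predicate: Callable[[Event], bool]) -> Iterator[Event]:
    """Yield only the events that match the pattern the window is looking for."""
    for event in events:
        if predicate(event):
            yield event


def looks_like_lunch(event: Event) -> bool:
    """The bear's rule: a fish of a known kind that is big enough to bother with."""
    return event.kind in {"trout", "salmon"} and event.size_cm >= 30.0


# Everything drifting past the bear, in arrival order.
stream = [
    Event("pebble", 2.0),
    Event("trout", 35.0),
    Event("leaf", 8.0),
    Event("salmon", 70.0),
    Event("minnow", 5.0),
]

lunch = list(filter_window(stream, looks_like_lunch))
print([event.kind for event in lunch])
```

Because `filter_window` is a generator, it examines each event as it arrives rather than waiting for the whole stream, which is the essential difference between streaming and batch analysis.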

Now imagine that instead of just a bear (or window) looking for a specific type, size or colour of fish (or event), we could take advanced analytical models and put them inside the windows. We could even create windows that run algorithms that learn from the patterns of data in the windows. Not only could we start to pass the streaming data through analytical models in real time, but we could also automatically learn new things about the data with machine learning. Well, there is no need to imagine what you could do with a capability like that any longer, because it is now available as part of the SAS platform in “SAS ESP for Analytics”.
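As a rough illustration of a window that learns from the data passing through it, the sketch below keeps a running mean and variance (using Welford's online algorithm) and flags values that sit far from what it has seen so far. This is a stand-in chosen for brevity, not how SAS ESP implements its models; the `LearningWindow` class, its `threshold` and `warmup` parameters, and the sample readings are all assumptions.

```python
import math


class LearningWindow:
    """Hypothetical window that updates a simple statistical model per event."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.n = 0            # number of values seen so far
        self.mean = 0.0       # running mean
        self.m2 = 0.0         # running sum of squared deviations
        self.threshold = threshold
        self.warmup = warmup  # values to observe before flagging anything

    def score(self, value: float) -> bool:
        """Return True if the value looks unusual, then learn from it."""
        unusual = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            unusual = std > 0 and abs(value - self.mean) > self.threshold * std
        # Welford update: fold the new value into the running statistics.
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)
        return unusual


window = LearningWindow()
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 25.0, 10.1]
flags = [window.score(reading) for reading in readings]
print(flags)
```

The model never stores the stream itself, only three numbers, so it can keep learning indefinitely as new events arrive.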

Streaming analytics

Streaming analytics is, at its core, a very simple concept. It only becomes complicated because of the amount and variety of information that is streaming around at any time, and the vast number of decisions that this could allow you to automate.

One way to handle a large thing (like big, streaming data) is to break it up into smaller things, which is what “SAS ESP for Edge Computing” allows. Instead of looking at everything at once with a single decision flow, why not have lots of specialised decision flows looking for specific things, all closer to the sources of the data, and then aggregate the information? Hang on, you say, doesn’t that mean I also need tools to manage and deploy all the decision flows to and from the devices at the edge and the machines in the data centre? Yes, but that’s what SAS Event Stream Manager does.
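The divide-and-aggregate idea can be sketched in a few lines of plain Python. Again, this is not SAS code: the `edge_flow` function, the store names and the event labels are hypothetical. Each edge flow summarises only the events it cares about, and the central step merges the small summaries rather than the raw streams.

```python
from collections import Counter
from typing import Iterable, Set


def edge_flow(events: Iterable[str], wanted: Set[str]) -> Counter:
    """Runs close to the data source: count only the event types of interest."""
    return Counter(event for event in events if event in wanted)


# Raw events seen at each (hypothetical) edge location.
edge_streams = {
    "store_north": ["view", "purchase", "view", "share"],
    "store_south": ["share", "share", "purchase"],
}

# Each edge sends back a compact summary instead of every raw event.
summaries = [
    edge_flow(events, {"purchase", "share"})
    for events in edge_streams.values()
]

# The data centre only has to merge the summaries.
total = sum(summaries, Counter())
print(dict(total))
```

The trade-off is the one the paragraph above describes: less data travels to the centre, but there are now many small decision flows to deploy and manage.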

Analytical models may need access to all the data, including historical data, to be built correctly. “SAS ESP for CAS” allows ESP to work efficiently with the high-performance, in-memory, distributed data held in SAS Viya, so that superfast, analytics-based answers from CAS can be used in the streaming decision flow if needed.

All these capabilities can be used in combination or separately with the core ESP engine. This is why SAS has created a new pricing model for its streaming technology. Each of the ESP products is licensed separately, depending on the needs of the project, and priced on event consumption. The challenge is no longer so much CPU and processors as how many events you need to observe.

In the next article, we will explore the pricing method further and examine some specific industry examples as to why it is needed.

Summing up

Let’s revisit the human eye for a moment. Of the 10 Mbit per second it can ‘stream’, the brain can only be consciously aware of around 100 bits per second. This is important to note, because one of the drivers behind AI is to see if we can use technology, software and analytics to improve on naturally evolved systems. Of course, we can already use our streaming analytics to examine all the data it subscribes to, all the time, if we want.

So does streaming analytics mean we can build artificial intelligence that is smarter than the average bear? Maybe not quite yet, but only because the systems haven’t yet received and assessed enough streaming data. The bear has received data throughout a lifetime, or through evolved genetic memory. But given enough time to monitor, learn and take action from the right data sources continuously, yes, it’s certainly possible one day.