Let's continue now to Nikolaos Kourentzes' blog post on How to choose a forecast for your time series.

Using a Validation Sample

Nikos first discusses the fairly common approach of using a validation or "hold out" sample.

The idea is to build your model based on a subset of the historical data (the "fitting" set), and then test its forecasting performance over the historical data that has been held out (the "validation" set). For example, if you have four years of monthly sales data, you could build models using the oldest 36 months, and then test their performance over the most recent 12 months.

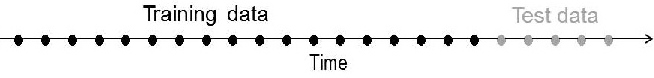

You might recognize this approach from the recent BFD blog Rob Hyndman on measuring forecast accuracy. Hyndman uses the terminology (which may be more familiar to data miners) of "training data" and "test data." He suggested that when there is enough history, about 20% of the observations (the most recent history) should be held out for the test data. The test data should be at least as large as the forecasting horizon (so hold out 12 months if you need to forecast one year into the future).
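Here is a minimal sketch of that hold-out rule in Python. The function name `split_history`, its parameters, and the sales numbers are made up for illustration; the 20% default and the "at least one forecast horizon" floor are just the rules of thumb described above.

```python
def split_history(series, horizon, test_fraction=0.2):
    """Split a series into (fitting, validation), holding out the most recent points."""
    n = len(series)
    # Hold out roughly test_fraction of the history,
    # but never fewer points than the forecasting horizon.
    n_test = max(horizon, round(n * test_fraction))
    if n_test >= n:
        raise ValueError("not enough history to hold out a validation set")
    return series[:n - n_test], series[n - n_test:]


# Four years of made-up monthly sales, oldest first.
monthly_sales = list(range(1, 49))
fit, valid = split_history(monthly_sales, horizon=12)
print(len(fit), len(valid))  # 36 12
```

With 48 months and a 12-month horizon, 20% would be only about 10 months, so the horizon floor takes over and the most recent 12 months are held out.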

Hyndman uses this diagram to show the history divided into training and test data. The unknown future (that we are trying to forecast) is to the right of the arrow:

Nikos works through a good example of this approach comparing an exponential smoothing and an ARIMA model. Each model is built using only the "fitting" set (the oldest history), and generates forecasts for the time periods covered in the validation set.

How accurately the competing models forecast the validation set can help you decide which type of model is more appropriate for the time series. You could then use the "winning" model to forecast the unknown future periods. An obvious drawback is that your forecasting model has only used the older fitting data, essentially sacrificing the more recent data in the validation set.

An alternative, once you've used the above approach to determine which type of model is best, is to rebuild the same type of model based on the full history (fitting + validation sets).
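The whole select-then-refit routine can be sketched in a few lines of Python. To keep it self-contained, this uses two hand-written toy forecasters (simple exponential smoothing and a seasonal naive forecast) as stand-ins, not the exponential smoothing and ARIMA models from Nikos's example. The data, the fixed `alpha`, and the choice of mean absolute error as the accuracy measure are all assumptions for illustration.

```python
def ses_forecast(history, horizon, alpha=0.3):
    """Simple exponential smoothing: a flat forecast at the final smoothed level."""
    level = history[0]
    for y in history[1:]:
        level = alpha * y + (1 - alpha) * level
    return [level] * horizon


def seasonal_naive_forecast(history, horizon, season=12):
    """Repeat the most recently observed season."""
    last_season = history[-season:]
    return [last_season[h % season] for h in range(horizon)]


def mae(actual, forecast):
    """Mean absolute error over the validation periods."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


# Made-up monthly series: a repeating seasonal pattern plus a small trend.
pattern = [10, 12, 15, 20, 26, 30, 32, 30, 24, 18, 13, 11]
series = [pattern[t % 12] + 0.1 * t for t in range(48)]
fitting, validation = series[:36], series[36:]

candidates = {"ses": ses_forecast, "seasonal_naive": seasonal_naive_forecast}

# Step 1: each model sees only the fitting set and forecasts the validation periods.
scores = {name: mae(validation, model(fitting, len(validation)))
          for name, model in candidates.items()}
winner = min(scores, key=scores.get)

# Step 2: rebuild the winning type of model on the full history
# (fitting + validation) to forecast the unknown future.
final_forecast = candidates[winner](series, 12)
print(scores, winner)
```

On this strongly seasonal toy series the seasonal naive forecast wins easily, and the final forecast is then produced from all 48 months rather than only the oldest 36.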

Using Information Criteria

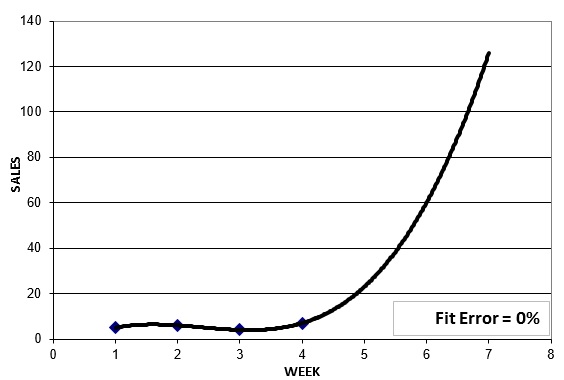

Information criteria (IC) provide an entirely different way of evaluating model performance. It is well recognized that more complex models can be constructed to fit the history better. In fact, it is always possible to create a model that fits a time series perfectly. But our job as forecasters isn't to fit models to history -- it is to generate reasonably good forecasts of the future.

As we saw in the previous BFD post, overly complex models may "overfit" the history, and actually generate very inappropriate forecasts.

Measures like Akaike's Information Criterion (AIC) help us avoid overfitting by balancing goodness of fit with model complexity (penalizing more complex models). Nikos provides a thorough example showing how the AIC works. As he points out, "The model with the smallest AIC will be the model that fits best to the data, with the least complexity and therefore less chance of overfitting."
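To make the trade-off concrete, here is a rough Python sketch (not Nikos's example). It assumes independent Gaussian errors, in which case the AIC can be computed, up to a constant that is the same for every model fitted to the same data, as n·ln(SSE/n) + 2k, where SSE is the sum of squared in-sample errors and k is the number of estimated parameters. The two candidate models (a constant mean and a straight-line trend) and the data are made up for illustration.

```python
import math


def gaussian_aic(residuals, n_params):
    """AIC up to an additive constant, assuming i.i.d. Gaussian errors."""
    n = len(residuals)
    sse = sum(e * e for e in residuals)
    # The first term rewards goodness of fit, the second penalizes complexity.
    return n * math.log(sse / n) + 2 * n_params


def mean_model_residuals(y):
    """In-sample errors of a constant-mean model (1 parameter)."""
    y_bar = sum(y) / len(y)
    return [v - y_bar for v in y]


def trend_model_residuals(y):
    """In-sample errors of a least-squares straight line (2 parameters)."""
    n = len(y)
    t_bar = (n - 1) / 2
    y_bar = sum(y) / n
    slope = (sum((t - t_bar) * (v - y_bar) for t, v in enumerate(y))
             / sum((t - t_bar) ** 2 for t in range(n)))
    intercept = y_bar - slope * t_bar
    return [v - (intercept + slope * t) for t, v in enumerate(y)]


# Made-up series: an upward trend plus a fixed alternating wiggle.
y = [5 + 0.5 * t + (1 if t % 2 == 0 else -1) for t in range(24)]

# Some conventions also count the error variance as a parameter; that adds
# the same amount to both models here and does not change the ranking.
aic_mean = gaussian_aic(mean_model_residuals(y), n_params=1)
aic_trend = gaussian_aic(trend_model_residuals(y), n_params=2)
print(aic_mean, aic_trend)
```

The trend model pays a larger complexity penalty, but its fit is so much better on this series that it still ends up with the smaller AIC.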

Another benefit of the AIC is that it uses the full time series history, so there is no need for separate fitting and validation sets. But a drawback is that AIC cannot be used to compare models from different model families (so you could not do the exponential smoothing vs. ARIMA comparison shown above). There is plenty of literature on the AIC, so you can find more details before employing it.

Combining Forecasts

Nikos ends his post with a great piece of advice on combining models. Instead of struggling to pick a single best model for a given time series, why not just take an average of several "appropriate" models, and use that as your forecast?
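A simple equal-weight average takes only a few lines of Python. The function name `combine_forecasts` and the three sets of forecast values below are made up for illustration; other weighting schemes are possible, and this sketch covers only the plain average.

```python
def combine_forecasts(forecasts):
    """Equal-weight average of several forecasts, period by period."""
    horizons = {len(f) for f in forecasts}
    if len(horizons) != 1:
        raise ValueError("all forecasts must cover the same periods")
    return [sum(values) / len(values) for values in zip(*forecasts)]


# Made-up three-period forecasts from three "appropriate" models.
model_a = [100.0, 110.0, 120.0]
model_b = [90.0, 100.0, 130.0]
model_c = [110.0, 105.0, 125.0]

combined = combine_forecasts([model_a, model_b, model_c])
print(combined)  # [100.0, 105.0, 125.0]
```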

There is growing evidence that combining forecasts can be effective at reducing forecast errors, while being less sensitive to the limitations of a single model. SAS® Forecast Server is one of the few commercial packages that readily allows you to combine forecasts from multiple models.