As customer interactions spread across multiple touch points, consumers demand seamless and relevant experiences. Traditional planning and design approaches, which rely on historical conventions, myopic single-channel perspectives and sequential act-and-learn iteration, no longer match the complexity and pace of modern digital marketing.

Marketers must re-evaluate their strategies for engagement, especially in the area of testing. Testing and measurement is a core requirement for effectively optimizing digital marketing activities and their accompanying conversion rates.

SAS Customer Intelligence 360 Engage was released last year to address our clients' needs across a variety of modern marketing challenges. Part of the software's capabilities revolve around:

Regardless of the method, testing is attractive because it is efficient, measurable and serves as a machete cutting through the noise and assumptions associated with delivering effective experiences. The question is: How does a marketer know what to test?

There are endless possibilities. Ideas flow out of brainstorming meetings, bright minds flourish with motivation and campaign concepts are born. As a data and analytics geek, I've worked with ad agencies and client-side marketing teams to help connect the dots between the world of predictive analytics (including machine learning, more recently) and the creative process.

Take a moment to reflect on the concept of ideation. Is it feasible to have too many ideas to practically try them all? How do you prioritize? Wouldn't it be awesome if a statistical model could help?

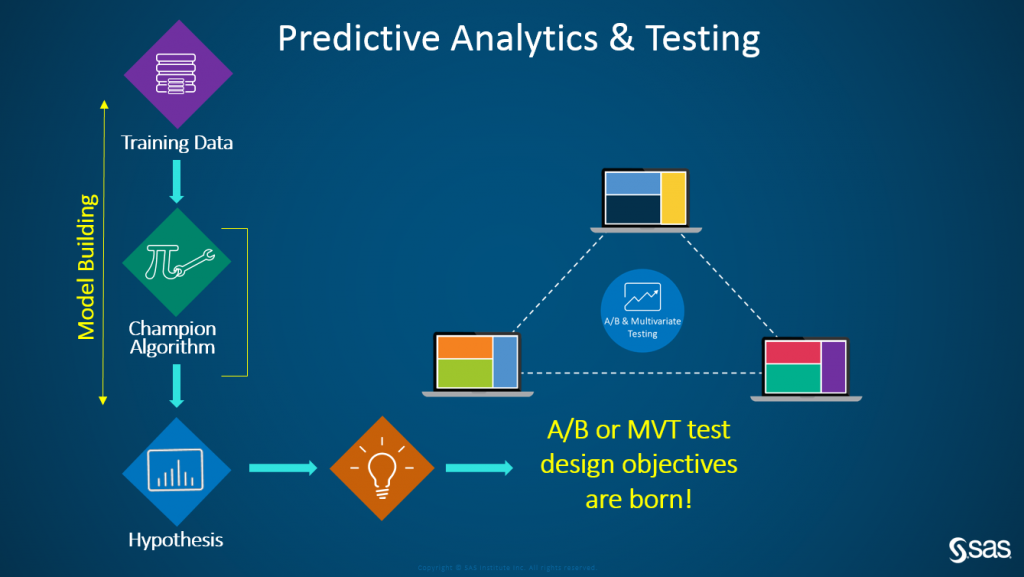

Let's break this down:

- Predictive analytics and machine learning projects always begin with data, specifically training data that is fed to algorithms to address an important business question.

- At the end of this exercise, the model supports a prescriptive recommendation that a marketer can act on. This is what we refer to as a hypothesis, and it is ready to be tested in-market.

- This is the connection point between analytics and testing. Even when a statistical model suggests doing something slightly different, that suggestion still needs to be tested before we can celebrate.

Here is the really sweet part. The space of visual analytics has matured dramatically, so creative minds dreaming up the next digital experience no longer need to be held back by hard-to-understand statistical Greek.

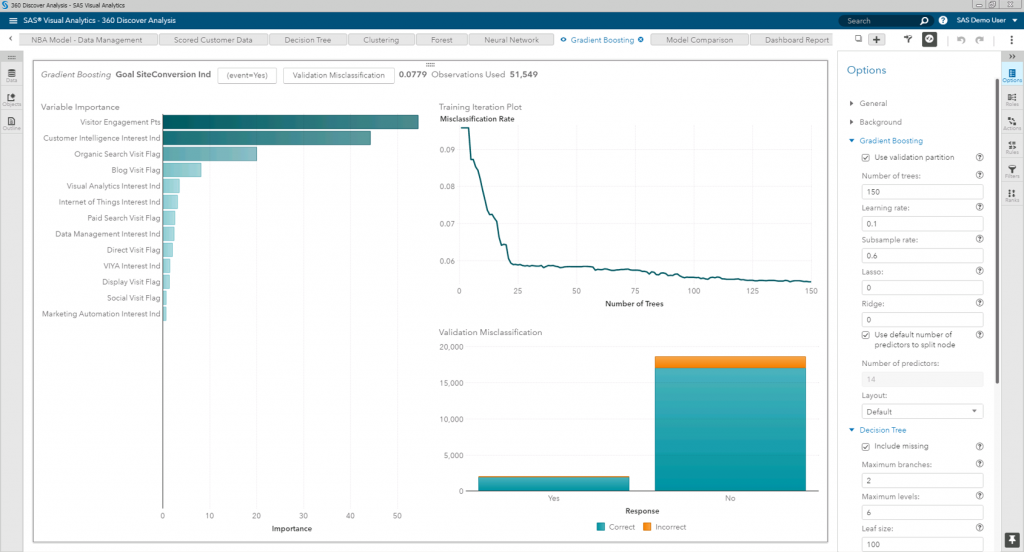

Want a simple example? Of course you do. I'm sitting in a meeting with a bunch of creatives. They are debating which pages on their website they should run optimization tests on. Should it be one of the 10 most visited pages? That's an easy web analytics report to run. However, are those the 10 most important pages with respect to a conversion goal? That's where the analyst can step up and help. Here's a snapshot of a gradient boosting model I built in a few clicks with SAS Visual Data Mining and Machine Learning, using sas.com website data collected by SAS Customer Intelligence 360 Discover, to identify what drives conversions.

I know what you're thinking. Cool data viz picture. So what? Take a closer look at this...

The model prioritizes what is important. This is critical: I have transparently highlighted (with statistical rigor, I might add) that site visitor interest in our SAS Customer Intelligence product page stands out as an important predictor of conversions. Now what?
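The gap between "most visited" and "most associated with conversion" can be illustrated without a full model. The sketch below is not gradient boosting and not what SAS Visual Data Mining and Machine Learning computes; it is a deliberately simpler stand-in that compares each page's conversion rate among visits that viewed it against visits that did not. The page names and visit counts are made up for illustration.

```python
from collections import defaultdict

# Hypothetical, made-up visit log: (pages viewed, converted?, number of such visits).
VISITS = [
    (("home",), False, 50),
    (("home",), True, 2),
    (("home", "blog"), False, 20),
    (("home", "blog"), True, 1),
    (("home", "ci-product"), False, 6),
    (("home", "ci-product"), True, 4),
    (("ci-product",), False, 3),
    (("ci-product",), True, 3),
    (("blog",), False, 10),
    (("blog",), True, 1),
]

def page_stats(visits):
    """Map each page to (visits that viewed it, conversion-rate lift vs. visits that did not)."""
    total_visits = sum(n for _, _, n in visits)
    total_conversions = sum(n for _, converted, n in visits if converted)
    seen = defaultdict(int)
    seen_conversions = defaultdict(int)
    for pages, converted, n in visits:
        for page in set(pages):
            seen[page] += n
            if converted:
                seen_conversions[page] += n
    stats = {}
    for page, n_seen in seen.items():
        n_unseen = total_visits - n_seen
        rate_seen = seen_conversions[page] / n_seen
        rate_unseen = (total_conversions - seen_conversions[page]) / n_unseen if n_unseen else 0.0
        stats[page] = (n_seen, rate_seen - rate_unseen)
    return stats

stats = page_stats(VISITS)
by_traffic = sorted(stats, key=lambda p: stats[p][0], reverse=True)
by_lift = sorted(stats, key=lambda p: stats[p][1], reverse=True)
print(by_traffic)  # ['home', 'blog', 'ci-product']
print(by_lift)     # ['ci-product', 'blog', 'home']
```

The most visited page ranks last on lift, while the least visited page ranks first. Unlike a multivariable model such as gradient boosting, this one-page-at-a-time comparison ignores how page views overlap and shows association rather than causation, which is one more reason the resulting idea still goes to a test.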

The creative masterminds and I agree we should test various ideas on how to optimize the performance of this important web page. A/B test? Multivariate test? As my SAS colleague Malcolm Lightbody stated:

"Multivariate testing is the way to go when you want to understand how multiple web page elements interact with each other to influence goal conversion rate. A web page is a complex assortment of content and it is intuitive to expect that the whole is greater than the sum of the parts. So, why is MVT less prominent in the web marketer’s toolkit?

One major reason – cost. In terms of traffic and opportunity cost, there is a combinatoric explosion in unique versions of a page as the number of elements and their associated levels increase. For example, a page with four content spots, each of which have four possible creatives, leads to a total of 256 distinct versions of that page to test.

If you want to be confident in the test results, then you need each combination, or variant, to be shown to a reasonable sample size of visitors. In this case, assume this to be 10,000 visitors per variant, leading to 2.56 million visitors for the entire test. That might take 100 or more days on a reasonably busy site. But by that time, not only will the marketer have lost interest – the test results will likely be irrelevant."

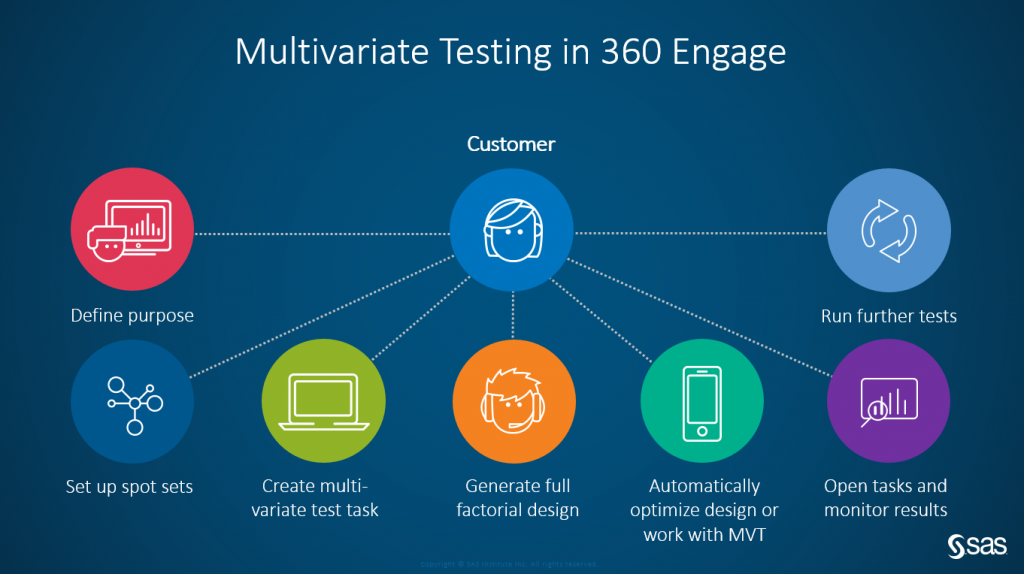

SAS Customer Intelligence 360 provides a business-user interface which allows the user to:

- Set up a multivariate test.

- Define exclusion and inclusion rules for specific variants.

- Optimize the design.

- Place it into production.

- Examine the results and take action.

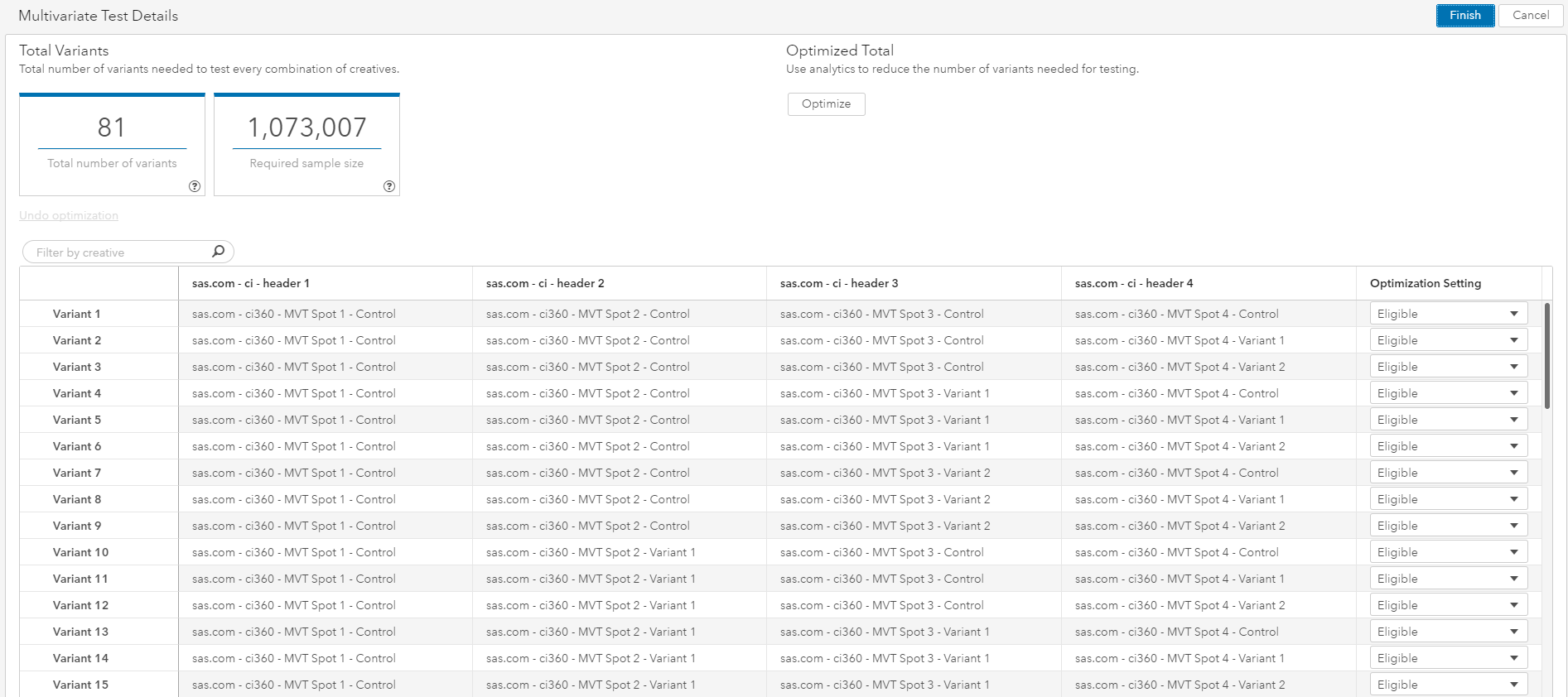

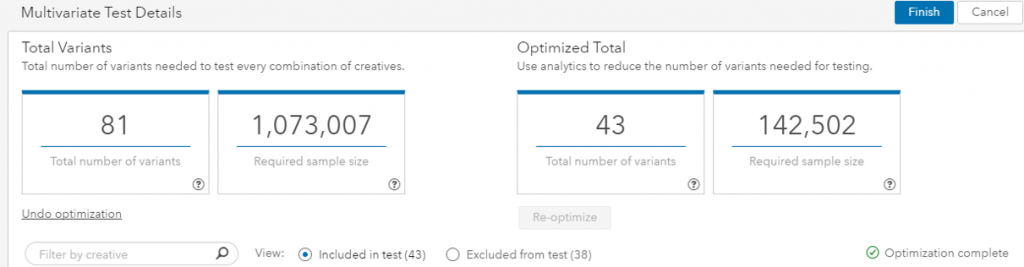

Continuing with my story, we decide to set up a test on the sas.com Customer Intelligence product page with four content spots and three creatives per spot. This results in 81 (3 × 3 × 3 × 3) total variants and an estimated sample size of 1,073,000 visits to get a significant read at a 90 percent confidence level.
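The post does not say how the 1,073,000 estimate was computed, what baseline conversion rate it assumed or what power it targeted. As a rough illustration only, the sketch below uses the classic two-proportion sample-size formula at a 90 percent confidence level, with an assumed 80 percent power and made-up conversion rates of 5 and 6 percent. It will not reproduce the figure above.

```python
import math
from statistics import NormalDist

def visits_per_variant(p_base, p_alt, confidence=0.90, power=0.80):
    """Classic per-arm sample size for a two-sided test of two proportions."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_alt * (1 - p_alt)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_base - p_alt) ** 2)

per_variant = visits_per_variant(0.05, 0.06)  # hypothetical 5% baseline vs. 6% target
total = per_variant * 3 ** 4                  # 81 variants in the full design
print(per_variant, total)
```

Smaller expected lifts or higher confidence levels push the per-variant number up quickly, and multiplying by 81 variants is what makes the total so daunting.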

Notice that Optimize button in the image? Let's talk about the amazing special sauce beneath it. Methodical experimentation has many applications for efficient and effective information gathering. To reveal or model relationships between an input, or factor, and an output, or response, the best approach is to deliberately change the former and see whether the latter changes, too. Actively manipulating factors according to a pre-specified design is the best way to gain useful, new understanding.

However, whenever there is more than one factor – that is, in almost all real-world situations – a design that changes just one factor at a time is inefficient. To properly uncover how factors jointly affect the response, marketers have numerous flavors of multivariate test designs to consider. The most common are factorial designs, such as full factorial, fractional factorial and mixed-level factorial. The challenge is that each method has strict requirements.
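To make the difference between full and fractional factorial designs concrete, the sketch below builds the 81-run full factorial for four content spots with three creatives each, and a classic 9-run 3^(4-2) fractional factorial defined by x3 = x1 + x2 and x4 = x1 + 2x2 (mod 3). It then checks orthogonality, meaning every pair of spots shows every pair of creatives equally often. This is a textbook construction chosen for illustration, not how SAS Customer Intelligence 360 builds its designs.

```python
import itertools
from collections import Counter

def full_factorial(levels, factors):
    """Every combination of levels: levels ** factors runs."""
    return list(itertools.product(range(levels), repeat=factors))

def fraction_3_4_2():
    """Regular 3^(4-2) fraction in 9 runs: x3 = x1 + x2, x4 = x1 + 2*x2 (mod 3)."""
    return [(a, b, (a + b) % 3, (a + 2 * b) % 3) for a in range(3) for b in range(3)]

def is_orthogonal(design, levels):
    """True if every pair of columns contains each level pair equally often."""
    factors = len(design[0])
    expected, remainder = divmod(len(design), levels * levels)
    if remainder:
        return False
    for i, j in itertools.combinations(range(factors), 2):
        counts = Counter((row[i], row[j]) for row in design)
        if len(counts) != levels * levels or any(c != expected for c in counts.values()):
            return False
    return True

full = full_factorial(3, 4)
fraction = fraction_3_4_2()
print(len(full), is_orthogonal(full, 3))          # 81 True
print(len(fraction), is_orthogonal(fraction, 3))  # 9 True
print(is_orthogonal(fraction[:-1], 3))            # False: dropping one run breaks the balance
```

This construction relies on the number of creatives being a prime (or prime power) and on the run count being a power of it, and removing even one forbidden variant destroys its balance. Those constraints are exactly what real website tests tend to violate.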

Real-world tests often can't meet those requirements, which leads to designs that, for example, are not orthogonal or that have irregular design spaces. Over a number of years, SAS has developed a solution to this problem in the OPTEX procedure, which supports designs for which:

- Not all combinations of the factor levels are feasible.

- The region of experimentation is irregularly shaped.

- Resource limitations restrict the number of experiments that can be performed.

- There is a nonstandard linear or a nonlinear model.

The OPTEX procedure can generate an efficient experimental design for any of these situations. Website (or mobile app) multivariate testing is an ideal candidate because it involves:

- Constraints on the number of variants that are practical to test.

- Constraints on required or forbidden combinations of content.
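To show roughly what an algorithmic design search does with those two constraints, the sketch below removes hypothetical forbidden combinations from the full factorial to form a candidate set, then runs a simple point-exchange search that swaps variants in and out to maximize det(X'X) for a main-effects model (D-optimality). PROC OPTEX offers several search methods, including Fedorov-type exchanges, and far more options than this. The forbidden rule, the 12-variant budget and the effects coding are assumptions for illustration, not the product's settings.

```python
import itertools
import random

LEVELS, SPOTS = 3, 4  # three creatives in each of four content spots, as in the example

def is_allowed(variant):
    """Hypothetical business rule: creative 2 in spot 0 never appears with creative 2 in spot 1."""
    return not (variant[0] == 2 and variant[1] == 2)

def model_row(variant):
    """Intercept plus effects-coded main effects (LEVELS - 1 columns per spot)."""
    row = [1.0]
    for level in variant:
        for k in range(LEVELS - 1):
            if level == LEVELS - 1:
                row.append(-1.0)
            else:
                row.append(1.0 if level == k else 0.0)
    return row

def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [list(r) for r in matrix]
    n = len(m)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return det

def d_criterion(design):
    """det(X'X) of the main-effects model matrix; larger means more information."""
    rows = [model_row(v) for v in design]
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    return determinant(xtx)

def exchange_search(candidates, n_runs, seed=0, max_passes=10):
    """Simple point exchange: swap design points for candidates while det(X'X) improves."""
    rng = random.Random(seed)
    design = rng.sample(candidates, n_runs)
    for _ in range(100):  # resample until the starting design is nonsingular
        if d_criterion(design) > 0:
            break
        design = rng.sample(candidates, n_runs)
    best = d_criterion(design)
    for _ in range(max_passes):
        improved = False
        for i in range(n_runs):
            for cand in candidates:
                if cand in design:
                    continue
                trial = design[:i] + [cand] + design[i + 1:]
                value = d_criterion(trial)
                if value > best * (1 + 1e-9):
                    design, best, improved = trial, value, True
        if not improved:
            break
    return design, best

candidates = [v for v in itertools.product(range(LEVELS), repeat=SPOTS) if is_allowed(v)]
design, d_value = exchange_search(candidates, n_runs=12)
print(len(candidates), len(design))  # 72 12
```

Forbidden variants never enter the design because they are never candidates, and the fixed budget of variants is spent where it carries the most information about each creative's main effect. An exchange search like this can stop at a local optimum, so trying several seeds is a common precaution.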

The OPTEX procedure is highly flexible and has many input parameters and options. This means that it can cover different digital marketing scenarios, and its use can be tuned as circumstances demand. Customer Intelligence 360 provides the analytic heavy lifting behind the scenes, and the marketer only needs to make choices for business-relevant parameters. Watch what happens when I press that Optimize button:

Suddenly, that scary sample size of roughly 1.07 million visits drops to 142,502 visits. The immediate benefit is that an impractical multivariate test has become feasible. However, if only a subset of the combinations is shown, how can the marketer understand what would happen for an untested variant? Simple! SAS Customer Intelligence 360 fits a model using the results of the tested variants and uses it to predict the outcomes for untested combinations. In this way, the marketer can simulate the entire multivariate test and draw reliable conclusions in the process.
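The post doesn't detail the model SAS Customer Intelligence 360 fits, so the sketch below makes a simple assumption: conversion rate is additive in the creative chosen for each spot (a main-effects model with no interactions). It fits that model by least squares to 12 tested variants and predicts all 81. To keep the sketch checkable, the "observed" rates are simulated from a made-up additive model rather than taken from any real test.

```python
import itertools

LEVELS, SPOTS = 3, 4

def model_row(variant):
    """Intercept plus treatment-coded main effects (creative 0 is each spot's baseline)."""
    row = [1.0]
    for level in variant:
        row.extend(1.0 if level == k else 0.0 for k in range(1, LEVELS))
    return row

def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [a[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_main_effects(tested):
    """Least-squares fit of the additive model to {variant: observed conversion rate}."""
    rows = [model_row(v) for v in tested]
    y = list(tested.values())
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)

def predict(coefs, variant):
    return sum(c * x for c, x in zip(coefs, model_row(variant)))

# Made-up "true" effects, used only to simulate observed rates for this sketch.
BASE = 0.040
EFFECT = [[0.0, 0.004, -0.002], [0.0, 0.001, 0.003], [0.0, -0.001, 0.002], [0.0, 0.002, 0.000]]

def true_rate(variant):
    return BASE + sum(EFFECT[spot][level] for spot, level in enumerate(variant))

# Tested subset: a 9-run orthogonal fraction plus three extra variants (12 in total).
l9 = [(a, b, (a + b) % 3, (a + 2 * b) % 3) for a in range(3) for b in range(3)]
tested = {v: true_rate(v) for v in l9 + [(0, 0, 1, 1), (1, 1, 1, 1), (2, 2, 2, 2)]}

coefs = fit_main_effects(tested)
all_variants = list(itertools.product(range(LEVELS), repeat=SPOTS))
predicted = {v: predict(coefs, v) for v in all_variants}
best = max(predicted, key=predicted.get)
print(best, best in tested)  # (1, 2, 2, 1) False
```

The best predicted variant here was never shown to anyone, which is the payoff of modeling the untested combinations. Because a main-effects model cannot see interactions, a test meant to detect how spots interact would need interaction terms in the model and a design with enough variants to estimate them.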

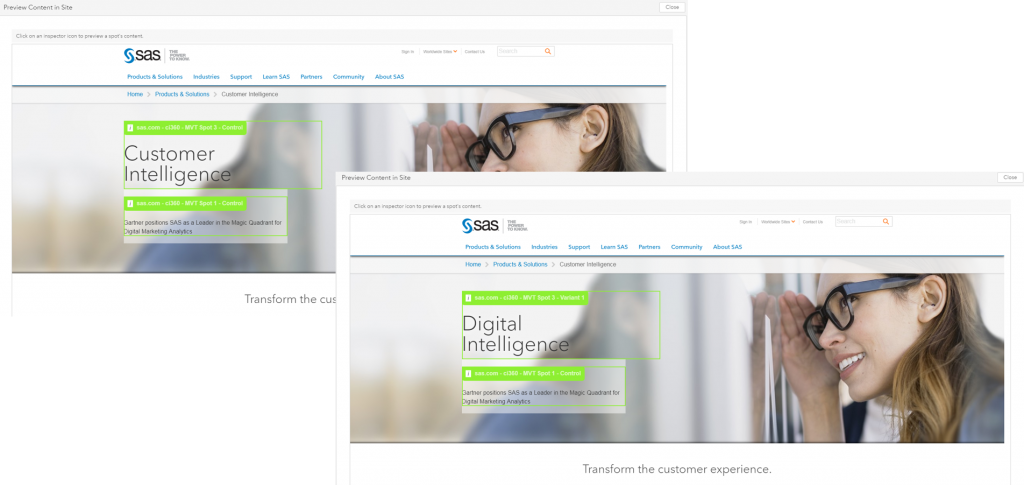

You may be thinking: So, we can dream big in the creative process and unleash our superpowers? That's right, my friends. You can even preview as many variants of the test's recipe as you desire.

The essence of personalization centers on designing and delivering content that resonates with the audience in order to generate higher engagement and conversion. Many solutions today are based on simple rules-based recommendations, segmentation and targeting, usually limited to a single customer touch point. This is not enough. Consider capabilities that integrate an array of predictive analytics and machine learning to contextualize digital customer experiences.

At the end of the day, connecting the dots between data science and testing, no matter which flavor you select, is a method I advocate. To every marketing analyst reading this:

Can you tell a good enough data story to inspire the creative minded?

Comments

You should also mention the MktEx macro. http://support.sas.com/rnd/app/macros/MktEx/MktEx.htm It can create full-factorial designs (also see PROC PLAN), fractional-factorial designs (also see PROC FACTEX), orthogonal arrays (no SAS procedure does this), nonorthogonal linear designs (also see PROC OPTEX). It can handle restrictions within observations (as can PROC OPTEX) and across observations (no procedure can do this). It uses all the procedures mentioned and does more. It can use a modified Fedorov algorithm (as can PROC OPTEX) and a coordinate exchange algorithm (no procedure can do this). For small designs, searching a full-factorial design from PROC PLAN by using PROC OPTEX works well. For many larger design problems, the coordinate exchange algorithm in MktEx works better. MktEx tries the FACTEX/PLAN/OPTEX strategy and other strategies. It then chooses the one that works best for further iteration. In its final stage it tries to improve the best design found so far. It can run with a NOQC option when SAS/QC is not available. It always requires SAS/IML. It can handle extremely complex restrictions. You have the full power of IML for operationalizing the restrictions.
